Multi Shape Objects

I wanted to load objects that were more than simple geometry. I experimented with more advanced hierarchical modelling, but it was too hard.

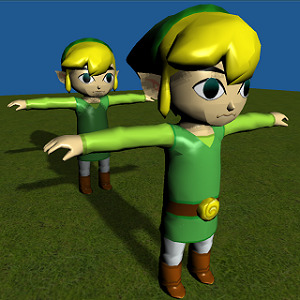

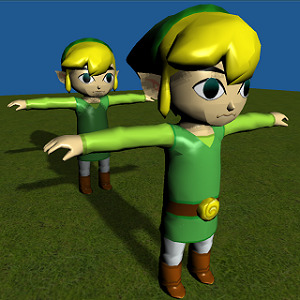

I then decided to just take my code and support multi-shape .obj files instead. One of the reasons was that I wanted to look at a 3D model that looked decent.

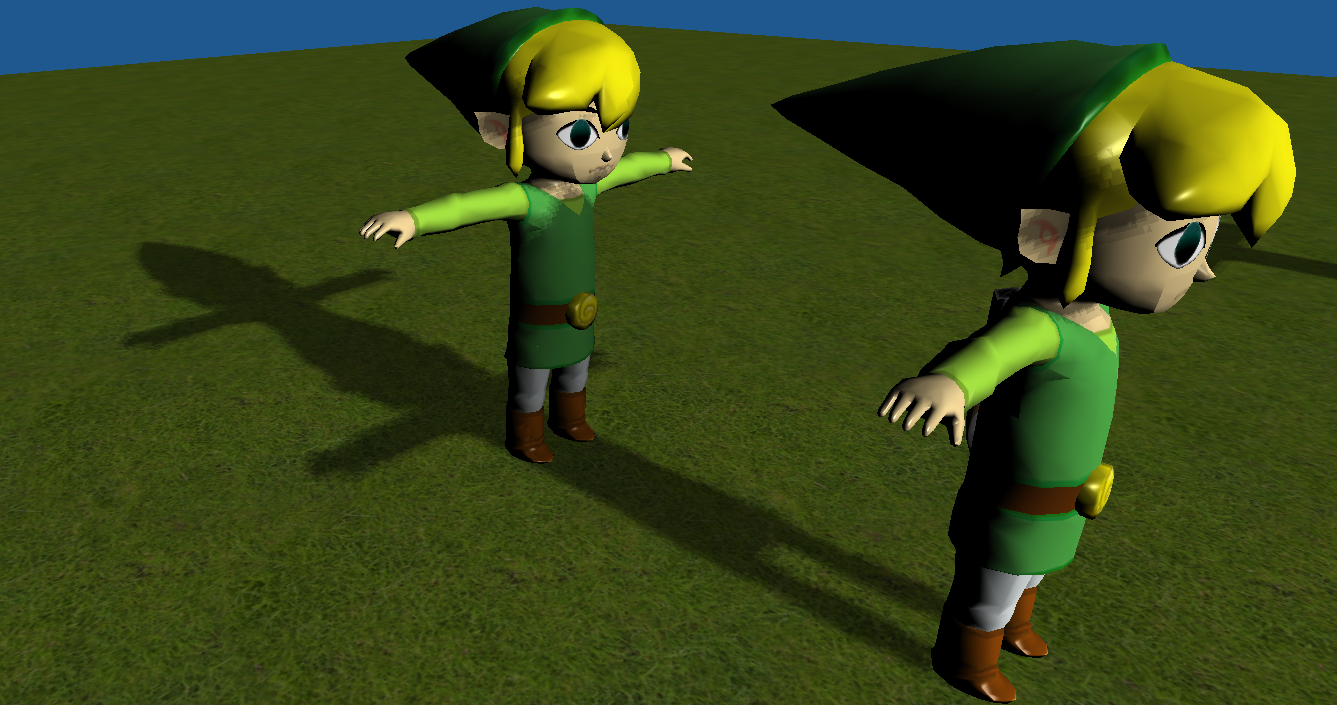

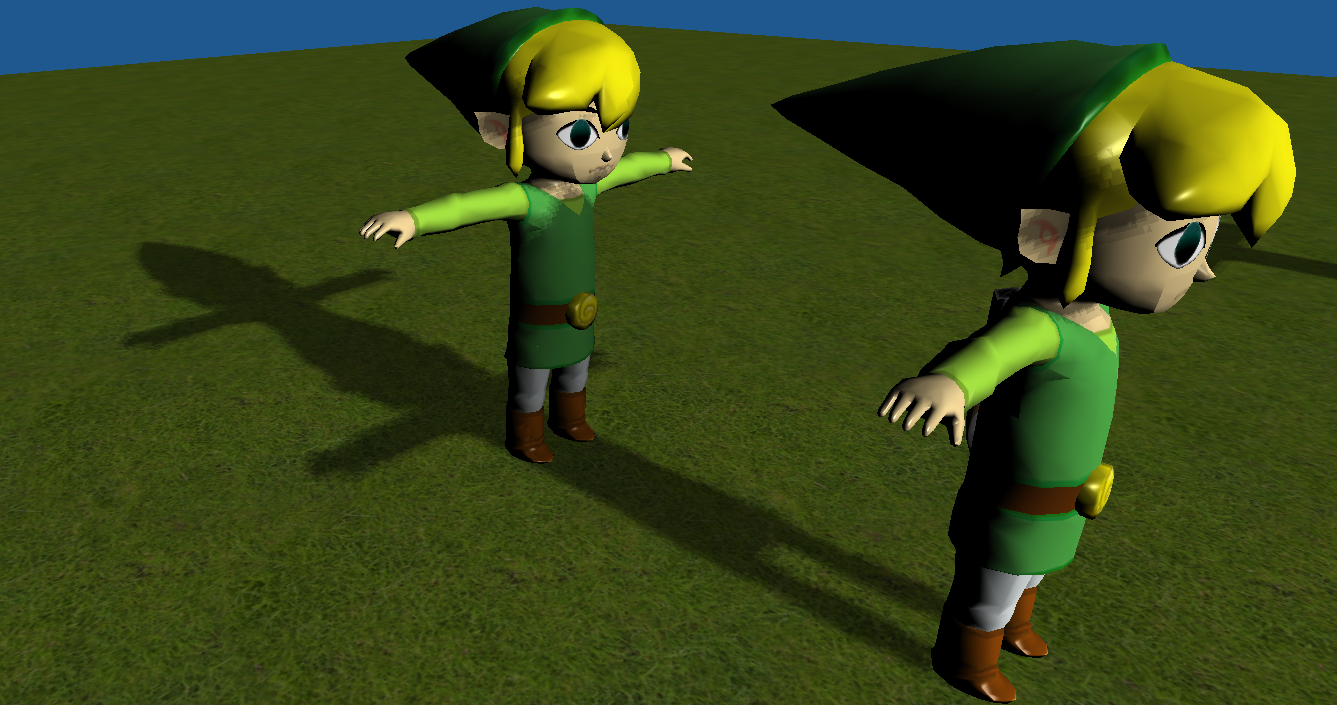

I found a good-looking ripped model of Link from The Legend of Zelda: Wind Waker. The website already provided the file in .obj format, so I didn't need to convert formats.

The file is a multi-shape object that is textured by an accompanying .mtl file. Tiny Obj Loader reads the materials (thankfully), so my job was just to design a data structure that contained all the shapes, their textures, and the information needed to send them to the buffer.
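A data structure like that could be sketched roughly as follows. This is only an illustration, not my actual code: the names `ShapeData`, `MaterialData`, `Model`, `triangleCount`, and `materialFor` are hypothetical, and the assumption that the regular texture comes from a `map_Kd` line in the .mtl is mine.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical per-material record (names are illustrative).
struct MaterialData {
    std::string colorTexture;  // regular texture file (assumed to come from map_Kd)
    std::string alphaTexture;  // alpha texture file (from map_d), empty if absent
};

// Hypothetical per-shape record: everything needed to fill the GPU buffers.
struct ShapeData {
    std::string name;
    std::vector<float> positions;       // x, y, z per vertex
    std::vector<float> texcoords;       // u, v per vertex
    std::vector<unsigned int> indices;  // three indices per triangle
    int materialIndex = -1;             // index into Model::materials, -1 if none
};

// One loaded .obj file: all of its shapes plus the materials they share.
struct Model {
    std::vector<ShapeData> shapes;
    std::vector<MaterialData> materials;
};

// Total number of triangles across every shape in the model.
std::size_t triangleCount(const Model& model) {
    std::size_t total = 0;
    for (const ShapeData& shape : model.shapes) {
        total += shape.indices.size() / 3;
    }
    return total;
}

// Look up a shape's material, or nullptr when the shape has none.
const MaterialData* materialFor(const Model& model, const ShapeData& shape) {
    if (shape.materialIndex < 0 ||
        static_cast<std::size_t>(shape.materialIndex) >= model.materials.size()) {
        return nullptr;
    }
    return &model.materials[static_cast<std::size_t>(shape.materialIndex)];
}
```

Drawing the model then becomes a loop over `model.shapes`, binding whatever `materialFor` returns before issuing each draw call.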

map_d

The .mtl has two textures per material: a regular texture file and a texture file that contains the alpha values to use. Supporting this in C++ was as simple as keeping track of another texture, but there was a subtle rendering technique I wasn't aware of. I turned on alpha blending and used the alpha texture to look up the alpha value for each fragment, but rendering fragments with an alpha of 0 doesn't really produce good results.

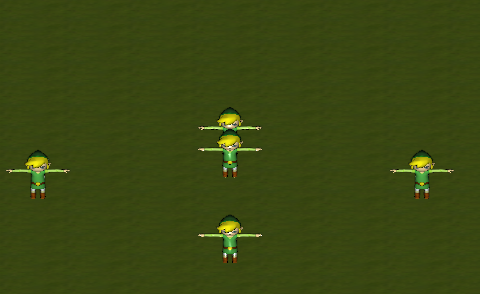

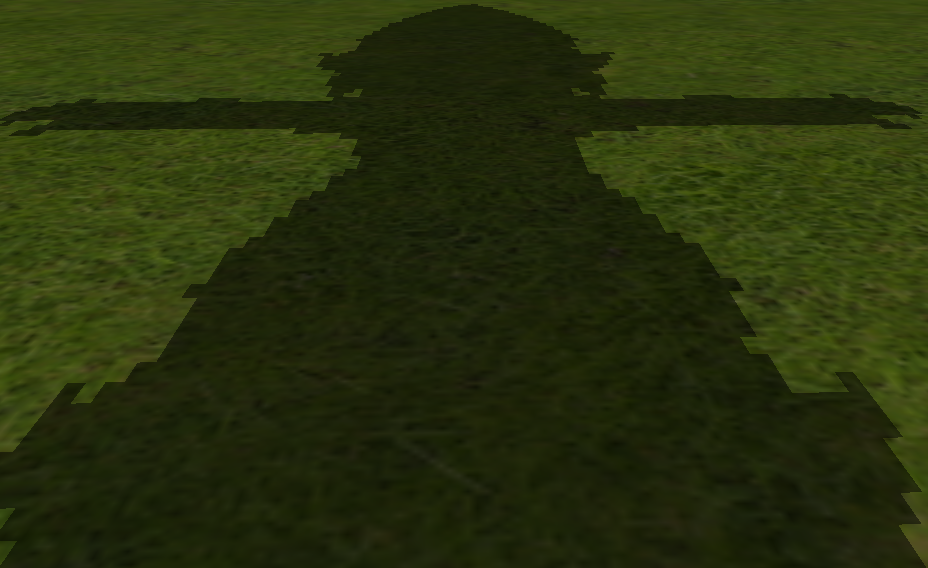

This is my fuck up. What's happening (as I later learned) is that the fully transparent fragment still passes the depth test and writes to the depth buffer, so the geometry behind Link's eyes fails the depth test and is culled (whoops). What I had to do was identify which fragments would be transparent and discard them. When a fragment is discarded, the depth buffer isn't written, and the geometry behind the fragment isn't culled.
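The effect is easy to reproduce on the CPU. The sketch below is a toy one-pixel-wide "framebuffer", not real GPU code: `Fragment`, `Framebuffer`, `shade`, and the `0.1` alpha cutoff are all made up for illustration. In the actual shader the early return would be a `discard` statement.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Fragment {
    int pixel;    // which pixel the fragment lands on
    float depth;  // 0 = near plane, 1 = far plane
    float alpha;  // value looked up from the alpha texture
    float color;  // single grey channel, to keep the example small
};

struct Framebuffer {
    std::vector<float> depth;
    std::vector<float> color;
    explicit Framebuffer(int pixels) : depth(pixels, 1.0f), color(pixels, 0.0f) {}
};

// Processes one fragment; returns true if it touched the framebuffer.
bool shade(Framebuffer& fb, const Fragment& f, bool discardTransparent) {
    const float kAlphaCutoff = 0.1f;  // assumed threshold, tune to taste
    if (discardTransparent && f.alpha < kAlphaCutoff) {
        return false;  // stands in for `discard`: no color write, no depth write
    }
    if (f.depth >= fb.depth[f.pixel]) {
        return false;  // depth test failed, something nearer is already there
    }
    fb.depth[f.pixel] = f.depth;  // depth is written even when alpha is 0
    fb.color[f.pixel] = f.alpha * f.color + (1.0f - f.alpha) * fb.color[f.pixel];
    return true;
}
```

Drawing a fully transparent near fragment and then an opaque far one leaves the pixel at the background color without the discard, and at the far fragment's color with it.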

Shadow Maps -- Or How I Learned to Hate Graphics Programming (Even Though Graphics is a Cool Guy)

The next thing I wanted to do for my project was shadowing. Shadows are cool because they're a hard problem to solve. How do you know if some geometry is occluded by other geometry? And by what? This problem cost me sleep and I had to dig into it.

I looked up how shadow maps work and found some example code to see how they are implemented. A texture is attached to a framebuffer object as its depth buffer, and projection and view matrices are calculated for the light's position and direction. The projection matrix must be chosen so that the fragments rendered to the framebuffer object are all the fragments illuminated by the light. The view matrix must point the negative Z-axis in the direction of the light.
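To make the view matrix requirement concrete, here is a small sketch that builds the light's basis vectors by hand instead of using a math library. Everything in it (`Vec3`, `LightView`, `makeLightView`, `toLightSpace`) is a hypothetical name, and it assumes the up hint is not parallel to the light direction.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// The rows of a look-at style view matrix, stored as separate vectors.
struct LightView {
    Vec3 eye;      // light position
    Vec3 right;    // light-space +X
    Vec3 up;       // light-space +Y
    Vec3 forward;  // direction the light shines; maps to light-space -Z
};

// Assumes upHint is not parallel to lightDir (the cross product would be zero).
LightView makeLightView(Vec3 eye, Vec3 lightDir, Vec3 upHint) {
    Vec3 f = normalize(lightDir);
    Vec3 r = normalize(cross(f, upHint));
    Vec3 u = cross(r, f);
    return {eye, r, u, f};
}

// World space -> light space. Points in front of the light get negative z.
Vec3 toLightSpace(const LightView& view, Vec3 world) {
    Vec3 d = sub(world, view.eye);
    return {dot(view.right, d), dot(view.up, d), -dot(view.forward, d)};
}
```

For a light at height 10 shining straight down, a point 5 units below it lands at z = -5 in light space, which is the "negative Z along the light direction" convention.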

Shadow maps for point lights are difficult to calculate because they require a cube shadow map: six different depth buffers have to be rendered to contain all the fragments lit by the light. That's hard, and directional lights were the easiest to implement on top of my point light code. Because a directional light illuminates all fragments in the geometry, an orthographic projection is needed to render all fragments lit by the light. The view frustum should be large enough to contain all these fragments.
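A quick way to check whether the orthographic volume is big enough is to project a few scene points by hand. The sketch below follows the usual OpenGL-style orthographic mapping to the [-1, 1] cube. The `OrthoBox`, `orthoProject`, and `insideLightVolume` names and the box dimensions in the usage note are made up for illustration.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Bounds of the light's orthographic view volume, in light space.
struct OrthoBox {
    float left, right, bottom, top, zNear, zFar;
};

// Maps a light-space point to normalized device coordinates.
// Light space looks down -Z, so z = -zNear maps to -1 and z = -zFar maps to +1.
Vec3 orthoProject(const OrthoBox& b, Vec3 p) {
    float x = 2.0f * (p.x - b.left) / (b.right - b.left) - 1.0f;
    float y = 2.0f * (p.y - b.bottom) / (b.top - b.bottom) - 1.0f;
    float z = -2.0f * p.z / (b.zFar - b.zNear) - (b.zFar + b.zNear) / (b.zFar - b.zNear);
    return {x, y, z};
}

// A point ends up in the shadow map only if all three coordinates are in [-1, 1].
bool insideLightVolume(Vec3 ndc) {
    return std::fabs(ndc.x) <= 1.0f && std::fabs(ndc.y) <= 1.0f && std::fabs(ndc.z) <= 1.0f;
}
```

With a box of `{-8, 8, -8, 8, 0, 16}`, the light-space point (4, 0, -8) projects to (0.5, 0, 0) and is covered, while (12, 0, -8) falls outside and would never receive a shadow.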

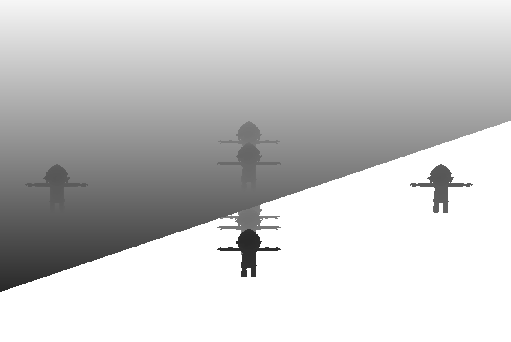

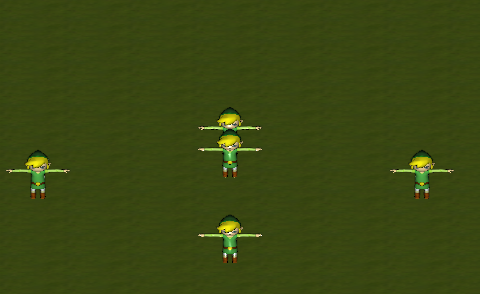

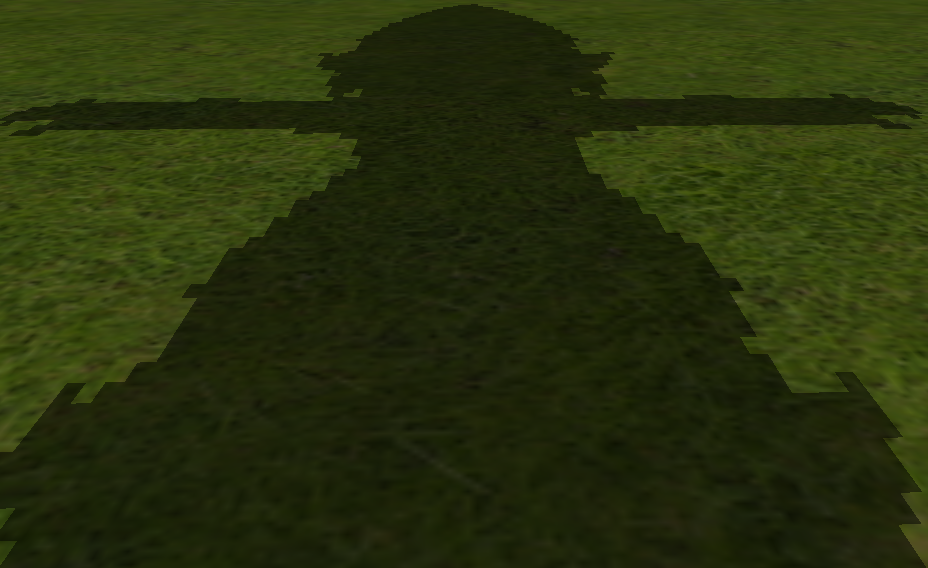

Screenshot from the camera's perspective

In the above screenshot, you can see the scene rendered from the light's point of view. The depth buffer saves the depth of each fragment, and because we attached a texture as that depth buffer, we can calculate any fragment's position in the light's coordinate system, sample the saved depth buffer, and determine if the fragment is occluded by other geometry. If it's deeper than the depth saved in the buffer, it must be occluded by other geometry and should be in shadow.
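The depth check itself is tiny. Here is a CPU-side sketch of it, with the depth texture replaced by a plain array. `DepthMap` and `inShadow` are hypothetical names, and the `bias` parameter is an extra I added for the sketch (a small positive value is a common way to hide self-shadowing artifacts); it defaults to 0 so the comparison matches the description above.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Stand-in for the depth texture rendered from the light's point of view.
struct DepthMap {
    int width;
    int height;
    std::vector<float> depth;  // row-major, values in [0, 1]

    float at(int x, int y) const {
        x = std::max(0, std::min(x, width - 1));   // clamp to the edge
        y = std::max(0, std::min(y, height - 1));
        return depth[static_cast<std::size_t>(y * width + x)];
    }
};

// ndcX/ndcY/ndcZ: the fragment's position after the light's view and
// projection transforms, each in [-1, 1].
bool inShadow(const DepthMap& map, float ndcX, float ndcY, float ndcZ, float bias = 0.0f) {
    // Remap from [-1, 1] to [0, 1] to get texture coordinates and depth.
    float u = ndcX * 0.5f + 0.5f;
    float v = ndcY * 0.5f + 0.5f;
    float fragDepth = ndcZ * 0.5f + 0.5f;

    int x = static_cast<int>(u * static_cast<float>(map.width));
    int y = static_cast<int>(v * static_cast<float>(map.height));

    // Deeper than what the light saw at this texel means something is in the way.
    return fragDepth - bias > map.at(x, y);
}
```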

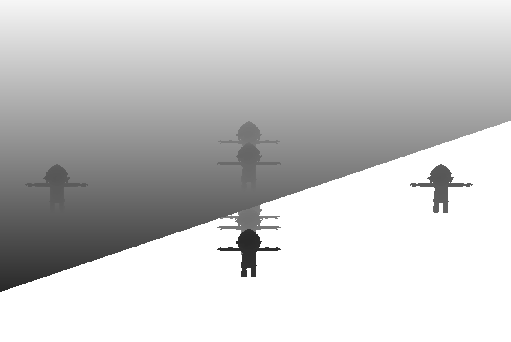

Fixing Garbage Aliasing

The depth buffer works pretty well: it's fast and the depth check is simple. However, the shadows look badly aliased because many fragments are competing for limited space in the depth buffer. A naive solution is to increase the resolution of the depth buffer.

Larger depth buffers are much slower than smaller ones, and while the aliasing is reduced, the shadows are too "sharp." This can be fixed using something called Poisson sampling.

Poisson Sampling

Poisson sampling is done by sampling the depth buffer multiple times per fragment, with each sample offset slightly from the fragment's light coordinates. The offsets are usually constants, and I copied these constants from a web page I found online.
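In code, the idea looks something like the sketch below. It runs on the CPU against a plain array rather than a depth texture, and the four offsets are a commonly circulated Poisson disk sample set used here purely as an illustration (not necessarily the constants I copied). `DepthMap`, `kPoissonDisk`, `visibility`, and the `spread` parameter are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct DepthMap {
    int width;
    int height;
    std::vector<float> depth;  // row-major, values in [0, 1]

    // Sample with texture coordinates in [0, 1], clamped to the edge.
    float sample(float u, float v) const {
        int x = static_cast<int>(u * static_cast<float>(width));
        int y = static_cast<int>(v * static_cast<float>(height));
        x = std::max(0, std::min(x, width - 1));
        y = std::max(0, std::min(y, height - 1));
        return depth[static_cast<std::size_t>(y * width + x)];
    }
};

// Illustrative Poisson disk offsets (assumed values, see the note above).
const float kPoissonDisk[4][2] = {
    {-0.94201624f, -0.39906216f},
    { 0.94558609f, -0.76890725f},
    {-0.09418410f, -0.92938870f},
    { 0.34495938f,  0.29387760f},
};

// Returns how lit the fragment is: 0 = fully shadowed, 1 = fully lit.
// `spread` scales the offsets; smaller values give harder shadow edges.
float visibility(const DepthMap& map, float u, float v, float fragDepth, float spread) {
    int lit = 0;
    for (const auto& offset : kPoissonDisk) {
        float su = u + offset[0] * spread;
        float sv = v + offset[1] * spread;
        if (fragDepth <= map.sample(su, sv)) {
            ++lit;  // this sample says the light can see the fragment
        }
    }
    return static_cast<float>(lit) / 4.0f;
}
```

A fragment sitting right on a shadow edge gets a value between 0 and 1 because some samples land in shadow and some don't, which is what softens the edge.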

The resulting shadows look pretty good.

These were the albums I listened to while working on the last homework assignment I'll ever have:

Strawberry Jam by Animal Collective

The Mouse and the Mask by Dangerdoom

Remain In Light by The Talking Heads

To Pimp A Butterfly by Kendrick Lamar

OGRE YOU ASSHOLE by OGRE YOU ASSHOLE

Niggaz on the Moon by Death Grips

AKUMA by ALEX & TOKYO ROSE

Innerspeaker by Tame Impala

Tsumanne by Shinsei Kamattechan