Nefertiti v. Kinect

An investigative graphics project by John Alkire

This project is an investigation of the Great Nefertiti 3D Model Heist of ’16.

In brief, two German artists used a Kinect to covertly scan the bust of Queen Nefertiti in the Neues Museum Berlin. Their motivation: “to make cultural objects publicly accessible.”[1]

However, some individuals in the field of graphics have called into question the validity of this narrative.[2] They have raised many doubts about whether a Kinect could have produced such a detailed model under the conditions in the museum.

Exhibit A: The Shared Model

Because the artists did not thoroughly describe their approach, information had to be gleaned from the video they posted. Key takeaways/assumptions:

- The Kinect was strapped vertically to someone’s chest
- The Kinect did not receive continuous input of the statue but rather was repeatedly covered and uncovered
- The statue was at a similar height to the Kinect

Exhibit B: The Kinect

museumshack from jnn on Vimeo. Exhibit C: The Video The

second website cited, amarna3d, provides a detailed theoretical explanation of

the inconsistencies with this story. For this project, I focused on two sources

of doubt: -

The

capability of an original Kinect to generate extremely detailed 3D models -

The

difficulties of piecing together Kinect data from multiple angles without

continuous visibility I

identified two technologies to investigate, Skanect and Microsoft Fusion. Skanect

is a standalone piece of software which can use the Kinect for 3D

reconstruction. This software proved quite impressive at recreating my test

objects—enough to suggest that the Kinect has the capability of producing an

object of the caliber of the Nefertiti data. However, it requires continuous

input from the Kinect and provides no option for using multiple sources of

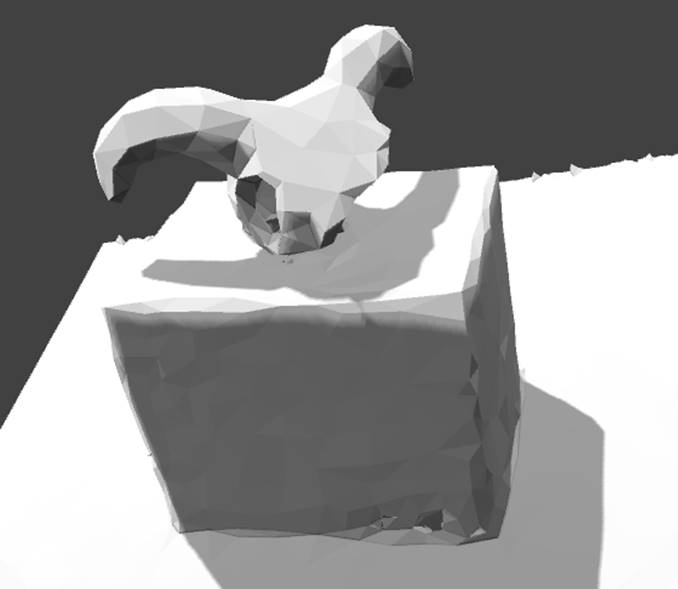

data. Exhibit D: A Skanect Model (note that the free version

of Skanect limits output polygons. The preview for this appeared much more

detailed) Kinect

Fusion is Microsoft Research’s 3D reconstruction API. Fusion is freely available

as part of the Kinect SDK and has been involved with multiple academic papers.[3] [4] I modified Microsoft’s

Fusion sample program to allow for non-continuous capture and preset Kinect

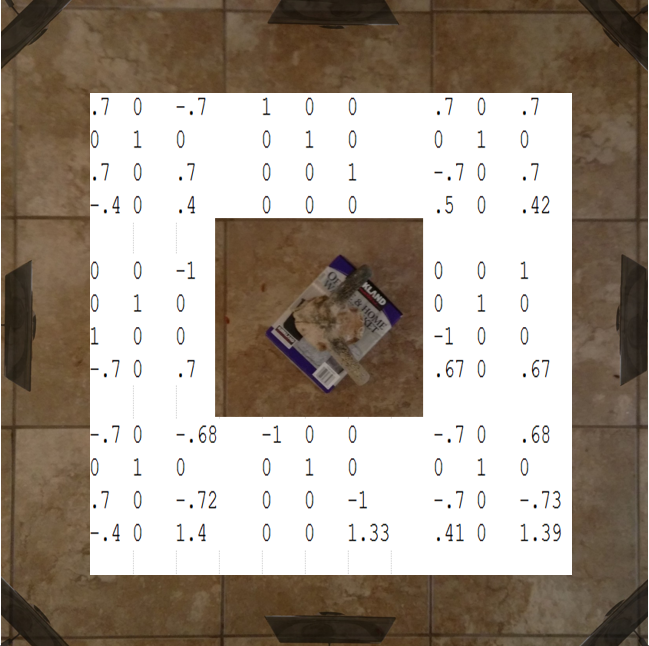

positions. Exhibit E: Kinect Positions and

Matrices I

used Fusion with continuous data capture to gather data on how it keeps track

of the Kinect’s position in the world. I created transformation matrices which

corresponded to eight positions on a square around the object. By toggling

through these matrices as I moved the Kinect to the corresponding positions, I

was able to gather data from 360o around the object and use Fusion

to put this into a 3D reconstruction. The fact that this worked at any level is

essentially the end of my success—the model produced was quite noisy and inaccurate.
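The preset-pose idea can be sketched in a few lines. This is not the modified Fusion sample (its code is not shown here) and it does not call the Kinect SDK; it is a minimal Python sketch under assumed conventions: the object sits at the world origin, the eight positions are the corners and edge midpoints of a square, the camera looks along its own +z axis, and each pose has only a yaw about the vertical axis (no tilt, roll, or height change). `HALF_SIDE`, `square_positions`, `world_to_camera` and `transform_point` are hypothetical names, and the 1 m half-side is an arbitrary placeholder rather than the distance used in the project.

```python
import math

# Hypothetical layout: object at the world origin, capture positions on a square
# of half-side HALF_SIDE metres at the same height as the object (y = 0).
HALF_SIDE = 1.0

def square_positions(s):
    """Corners and edge midpoints of a square around the origin, walked in order."""
    return [(0, 0, s), (s, 0, s), (s, 0, 0), (s, 0, -s),
            (0, 0, -s), (-s, 0, -s), (-s, 0, 0), (-s, 0, s)]

def world_to_camera(position):
    """Row-major 4x4 world-to-camera matrix for a camera at `position` aimed at
    the origin. Assumes the camera looks along its own +z axis and is rotated
    only about the vertical y axis (idealized: no tilt or roll)."""
    px, py, pz = position
    yaw = math.atan2(-px, -pz)  # yaw that turns the camera's +z toward the origin
    c, s = math.cos(yaw), math.sin(yaw)
    # Camera-to-world rotation is R_y(yaw); world-to-camera uses its transpose.
    rt = [[c, 0.0, -s],
          [0.0, 1.0, 0.0],
          [s, 0.0, c]]
    # Translation column is -R^T * position.
    t = [-(rt[i][0] * px + rt[i][1] * py + rt[i][2] * pz) for i in range(3)]
    return [rt[0] + [t[0]], rt[1] + [t[1]], rt[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]

def transform_point(m, p):
    """Apply a 4x4 matrix to a 3D point with homogeneous coordinate w = 1."""
    x, y, z = p
    return tuple(m[i][0] * x + m[i][1] * y + m[i][2] * z + m[i][3] for i in range(3))

if __name__ == "__main__":
    for pos in square_positions(HALF_SIDE):
        m = world_to_camera(pos)
        # Sanity check: the object's centre should land on the optical axis.
        centre = transform_point(m, (0.0, 0.0, 0.0))
        print(pos, [round(v, 3) for v in centre])
```

Each matrix maps the object's centre to (0, 0, distance) in camera space, which is a quick way to confirm the poses all face the object. A physically placed Kinect would add small tilt, roll and offset terms that this idealized version deliberately leaves out.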

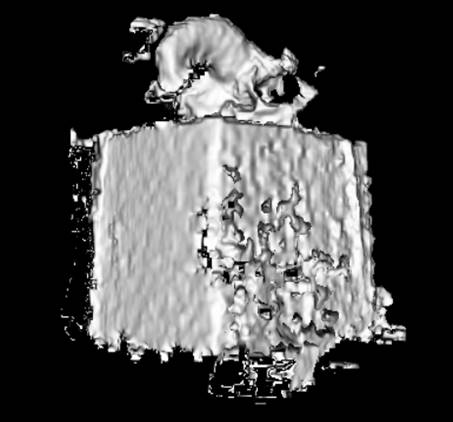

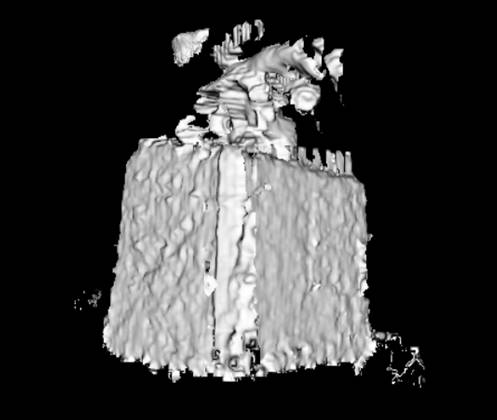

Exhibit F: Fusion Reconstructions from Multiple Angles

The reasons for this became quite clear while I was working with the Kinect: my world position matrices were idealized, with numbers corresponding to exact angles and tilts, whereas the matrices produced by Fusion with continuous data contained many small decimals reflecting the Kinect’s actual angle and position. It was simply not possible for me to position the Kinect as precisely as was necessary to get solid data.

Is there a different approach that might have allowed for less precise positioning? Possibly, but I could not figure out a way to provide the Kinect with an approximate position.

Accordingly, my scientific conclusion is that this “experiment” suggests Kinect Fusion could not feasibly have been used for the Nefertiti data collection without significant and highly technical additional programming.

My personal conclusion, however, is that the Kinect was not used for this data heist. Using a slow-moving Kinect two feet away from the subject, Skanect was able to produce a high-quality model (though even that was not at the released data’s level of detail). Add in glass, a crowd, no y-axis movement (as the Kinect was tied to a chest), greater distance, AND no continuous video footage, and the chances of such a venture succeeding seem slimmer and slimmer.

There are, though, some easily imaginable ways that the heist could have been real. First and foremost, the artists could have used far more footage than the video suggests; I was simply assuming that they were unable to get close, continuous Kinect footage because that is how things appeared in the video. Additionally, I am no Kinect expert. Perhaps someone with much more skill and time could have stitched together the separate pieces of Kinect data. Hence, nothing has been proven by this investigation. The reader must simply draw their own, slightly more informed conclusion after seeing the limitations I experienced with Fusion.
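The positioning limitation described earlier can be given a rough scale with a little geometry. Take a depth sample lying straight ahead of the camera at distance d. If the pose used to place it is off by a small yaw error δ about the camera centre, the sample lands a chord length 2d·sin(δ/2) away from where it belongs, and a sideways position error adds to that. The Python sketch below is a simplified top-down (2D) model under those assumptions, not anything taken from Kinect Fusion; `place_sample` and `misplacement` are hypothetical helper names.

```python
import math

# Simplified top-down (x, z) model: the real camera sits at the origin, looks
# along +z, and records a depth sample straight ahead at `depth_m` metres.

def place_sample(depth_m, yaw_deg, cam_x, cam_z):
    """World (x, z) position assigned to an on-axis depth sample for a camera
    at (cam_x, cam_z) rotated by `yaw_deg` about the vertical axis."""
    yaw = math.radians(yaw_deg)
    return (cam_x + depth_m * math.sin(yaw), cam_z + depth_m * math.cos(yaw))

def misplacement(depth_m, yaw_error_deg, sideways_error_m):
    """Distance (metres) between where the sample really is and where it is
    placed when the assumed pose has the given yaw and sideways (x) errors."""
    true_point = place_sample(depth_m, 0.0, 0.0, 0.0)
    assumed_point = place_sample(depth_m, yaw_error_deg, sideways_error_m, 0.0)
    return math.dist(true_point, assumed_point)

if __name__ == "__main__":
    for yaw_err, side_err in [(0.5, 0.0), (1.0, 0.0), (2.0, 0.0), (1.0, 0.01)]:
        err_cm = 100 * misplacement(1.0, yaw_err, side_err)
        print(f"yaw error {yaw_err} deg, offset {side_err} m -> {err_cm:.2f} cm")
```

At d = 1 m, a 1° yaw error alone misplaces the sample by about 1.75 cm, and the error grows linearly with distance. Under this simplified model, errors of that size repeated across several hand-positioned views would plausibly be enough to keep overlapping surfaces from lining up.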