My final project is an Audio Visualizer Engine. What sets it apart from an ordinary audio visualizer is that it is a system in which multiple audio visualizers can be created and then played one after another.

This project consisted of three main parts:

In this class we learned that three things are necessary to create a scene: geometry, lighting, and a viewport. The first part of this project was to abstract these three components into a World class. I then created a Render class that can take in any World and render it to the scene. This abstraction let me create various Worlds, in this case audio visualizers, and seamlessly swap between them in real time. The system can be extended beyond audio visualizers to any graphics project.
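The World/Render split can be sketched roughly as follows. This is a minimal illustration in Python, not the project's actual code: the field names, the `update` hook, and `next_world` are assumptions made for the example, and the real renderer would issue graphics draw calls instead of returning a string.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class World:
    """Bundles the three things a scene needs: geometry, lighting, and a viewport."""
    name: str
    geometry: List[Vec3] = field(default_factory=list)  # vertex positions
    lights: List[Vec3] = field(default_factory=list)    # light positions
    viewport: Tuple[int, int] = (800, 600)               # width, height in pixels

    def update(self, spectrum: List[float]) -> None:
        """Hypothetical hook: a visualizer World would react to audio data here."""


class Render:
    """Draws whichever World is active without knowing what it contains."""

    def __init__(self, worlds: List[World]) -> None:
        if not worlds:
            raise ValueError("need at least one World")
        self.worlds = worlds
        self.active = 0

    def next_world(self) -> World:
        """Swap to the next World, wrapping around at the end of the list."""
        self.active = (self.active + 1) % len(self.worlds)
        return self.worlds[self.active]

    def render(self, spectrum: List[float]) -> str:
        world = self.worlds[self.active]
        world.update(spectrum)
        # A real renderer would draw here; this sketch only reports what it has.
        return (f"{world.name}: {len(world.geometry)} vertices, "
                f"{len(world.lights)} lights, viewport {world.viewport}")
```

Because `Render` only depends on the `World` interface, adding a new visualizer means writing one more `World` and appending it to the list.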

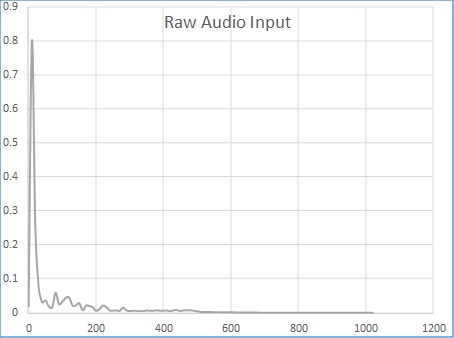

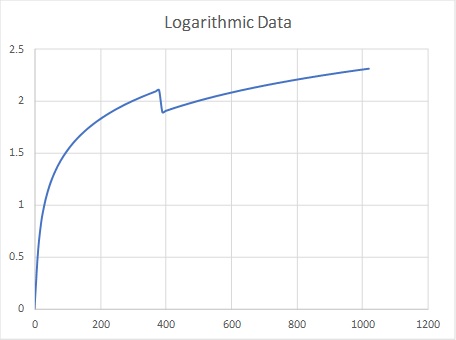

Audio data is read using a library called FMOD, which uses an FFT (Fast Fourier Transform) to expose the audio as frequency data. However, FFT data is organized by frequency in hertz, and in any given song there are far more sounds in the low-frequency range than in the high-frequency range. I applied a logarithmic function to make the audio data more uniform. The graphs of the various audio data can be seen below.
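One common way to apply a logarithmic mapping is to average the linearly spaced FFT bins into bands whose edges grow geometrically, so the crowded low end gets narrow bands and the sparse high end gets wide ones. The sketch below assumes that approach; the exact function used in the project is not stated, and `log_bands` and its parameters are hypothetical names. The input is just a list of magnitudes, however it was obtained from FMOD.

```python
from typing import List


def log_bands(spectrum: List[float], num_bands: int) -> List[float]:
    """Average linearly spaced FFT bins into logarithmically spaced bands."""
    n = len(spectrum)
    if num_bands < 1 or n < num_bands:
        raise ValueError("need at least one bin per band")
    bands = []
    start = 0
    for b in range(1, num_bands + 1):
        # Band edges grow geometrically: n ** (b / num_bands) ends exactly at n.
        end = max(start + 1, round(n ** (b / num_bands)))
        # Leave at least one bin for each of the remaining bands.
        end = min(end, n - (num_bands - b))
        chunk = spectrum[start:end]
        bands.append(sum(chunk) / len(chunk))
        start = end
    return bands
```

With 8 bins and 3 bands, for instance, the bands cover 2, 2, and 4 bins respectively, so each band spans a progressively wider slice of the spectrum.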

My project includes two audio visualizers. The first is a very basic one just to get the ball rolling, and the second, although more dynamic, is far less compelling.

The first Audio Visualizer World is simply a line of cubes that scale according to the audio input data. Each fragment's red component increases as it approaches the top of the window.
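The two ideas in this World, per-cube scaling and a height-based red ramp, can be sketched on the CPU as below. This is only an illustration under assumptions: in the project the coloring happens per fragment in a shader, the normalization to the loudest band is my choice, and the green and blue values are arbitrary placeholders.

```python
from typing import List, Tuple


def cube_scales(bands: List[float], max_height: float = 1.0) -> List[float]:
    """One vertical scale factor per cube, normalized to the loudest band."""
    peak = max(bands, default=0.0)
    if peak <= 0.0:
        return [0.0 for _ in bands]  # silence: every cube collapses to zero
    return [max_height * (b / peak) for b in bands]


def fragment_color(y: float, window_height: float) -> Tuple[float, float, float]:
    """RGB color whose red channel ramps from 0 at the bottom to 1 at the top."""
    t = min(max(y / window_height, 0.0), 1.0)  # clamp to [0, 1]
    return (t, 0.2, 0.5)  # green and blue are arbitrary constants
```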

The second audio visualizer took some time. I wanted to dynamically create a rectangular prism made of segments. Each segment would scale to the audio and move independently of the other segments, creating a 'Ribbon' that bounces to the music.

To create the ribbon, I started with a base rectangle and then manually added vertices, faces, and normals to it. The resulting segments were individually scaled with the audio input to create a waving ribbon.
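A rough sketch of this kind of mesh construction is shown below. It is not the project's code: it assumes the ribbon runs along the x axis, that each cross-section is a square scaled by one audio value, and that normals are computed per face from a cross product. The names `build_ribbon`, `seg_len`, and `half` are hypothetical, and end caps are omitted.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def face_normal(p0: Vec3, p1: Vec3, p2: Vec3) -> Vec3:
    """Unit normal of triangle (p0, p1, p2); zero vector if it is degenerate."""
    e1 = (p1[0] - p0[0], p1[1] - p0[1], p1[2] - p0[2])
    e2 = (p2[0] - p0[0], p2[1] - p0[1], p2[2] - p0[2])
    c = (e1[1] * e2[2] - e1[2] * e2[1],
         e1[2] * e2[0] - e1[0] * e2[2],
         e1[0] * e2[1] - e1[1] * e2[0])
    length = math.sqrt(c[0] ** 2 + c[1] ** 2 + c[2] ** 2)
    if length == 0.0:
        return (0.0, 0.0, 0.0)
    return (c[0] / length, c[1] / length, c[2] / length)


def build_ribbon(scales: List[float], seg_len: float = 1.0, half: float = 0.5):
    """Return (vertices, faces, normals) for a segmented rectangular prism.

    scales[i] sizes the i-th cross-section, so len(scales) - 1 segments are built.
    """
    vertices: List[Vec3] = []
    for i, s in enumerate(scales):
        x, h = i * seg_len, half * s
        # Four corners of the square cross-section at this position.
        vertices += [(x, -h, -h), (x, h, -h), (x, h, h), (x, -h, h)]

    faces: List[Tuple[int, int, int]] = []
    for i in range(len(scales) - 1):
        a, b = 4 * i, 4 * (i + 1)  # first vertex index of each cross-section
        for k in range(4):
            k2 = (k + 1) % 4
            # Each side quad becomes two triangles wound so normals face outward.
            faces.append((a + k, b + k2, b + k))
            faces.append((a + k, a + k2, b + k2))

    normals = [face_normal(vertices[i], vertices[j], vertices[k]) for i, j, k in faces]
    return vertices, faces, normals
```

Rebuilding the vertex list each frame with fresh `scales` values is the simplest way to animate the ribbon; updating the existing vertices in place would avoid the reallocation.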

Unfortunately, I was unable to finish the normals of the ribbon or its movement. I plan to complete both soon. I want to use Perlin noise to move each of the ribbon's segments independently so that they appear to flow through space.