Volumetric Ray Tracing of Smooth Particle Hydrodynamics

473 Final Project

Paul Armer - parmer

Suzie Marano - smarano

This dynamic duo set out to ray trace the smooth particle hydrodynamics simulation from Suzie's thesis (which attempts to model hair as a fluid). Our goal was to determine whether volumetric ray tracing is a valid method for analyzing the output of Suzie's particles.

Overview

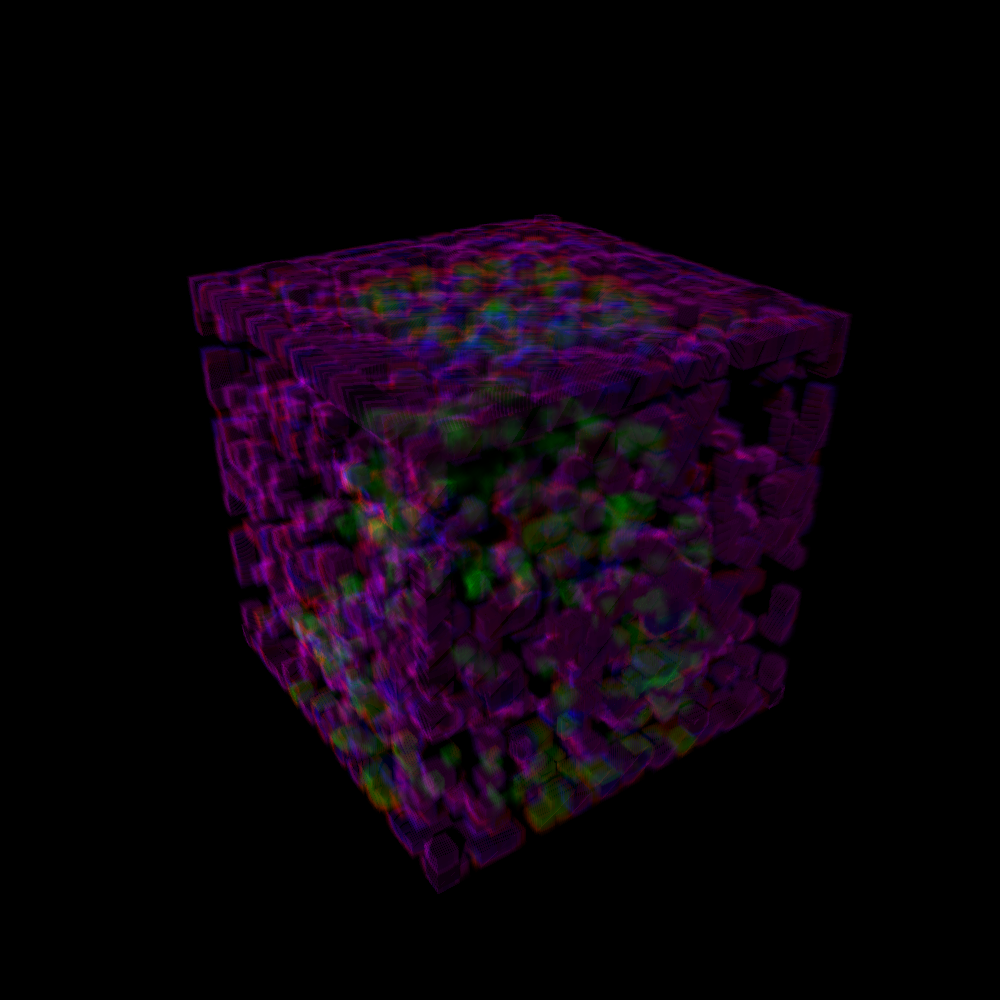

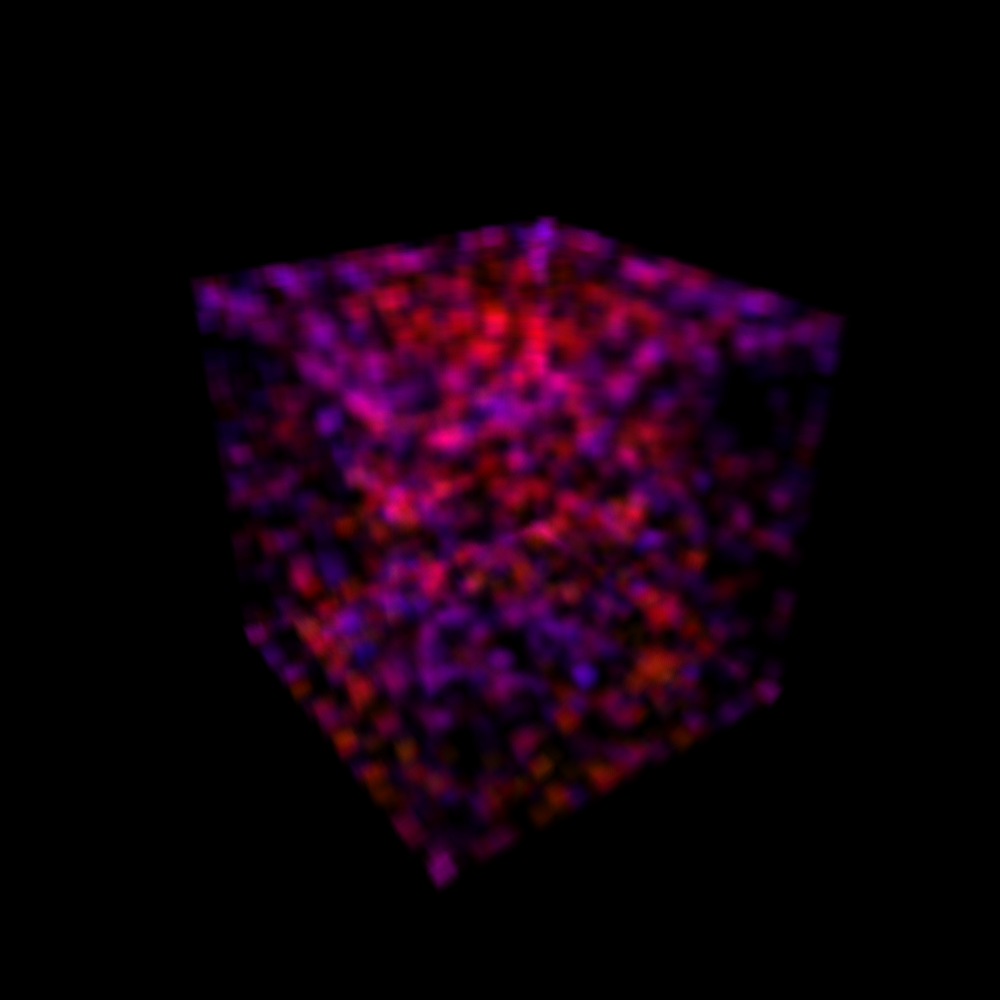

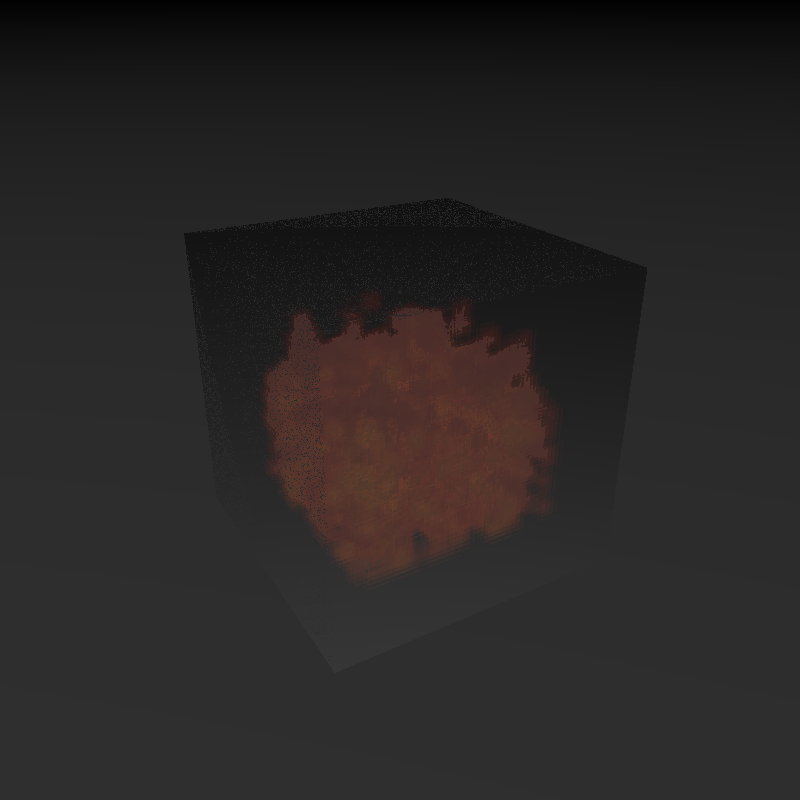

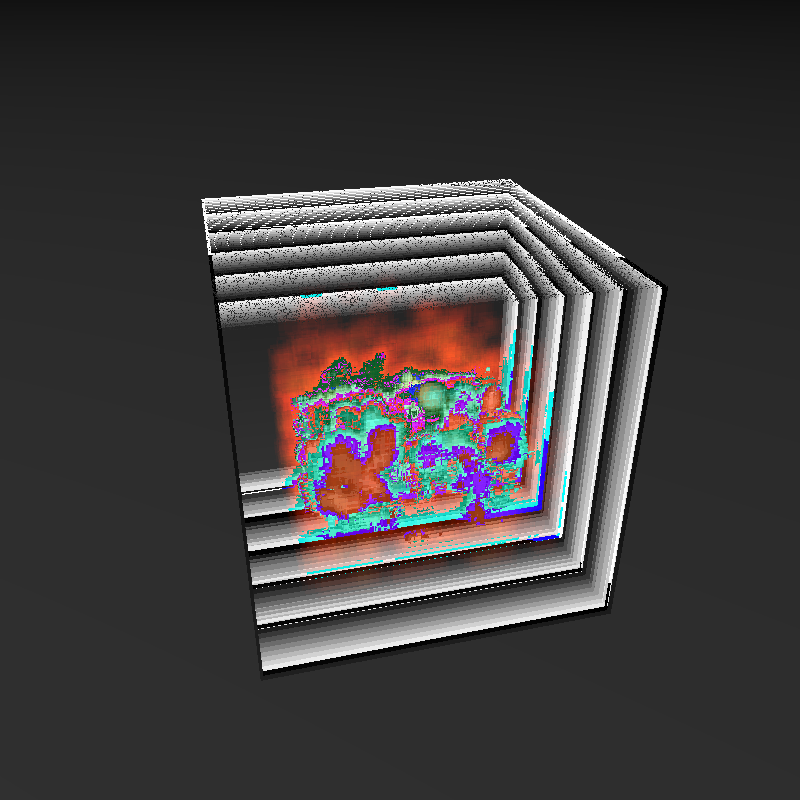

Our ray tracer sends out viewing rays as a normal ray tracer would. Once a ray intersects the voxel grid, however, we step it through the grid, collecting color as we go. As we walk through the voxel grid we also accumulate an alpha value, which represents the opacity of each voxel, and once we reach our threshold opacity we return the accumulated color.

The color of each voxel is determined by the data we wish to inspect. Each voxel can be colored by the density, pressure, or acceleration of the particle in that voxel. We can also vary the alpha value of each voxel with the pressure or density (since those are scalar values).
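As an illustration of the mapping described above, here is a minimal Python sketch that turns a scalar density into a voxel color and alpha. The names `Voxel`, `normalize`, and `density_to_voxel`, the blue-to-red ramp, and the `max_alpha` cap are all assumptions made for this example; they are not taken from our ray tracer.

```python
from dataclasses import dataclass


@dataclass
class Voxel:
    color: tuple  # (r, g, b), each channel in [0, 1]
    alpha: float  # opacity contribution of this voxel


def normalize(value, lo, hi):
    """Map value from [lo, hi] to [0, 1], clamping out-of-range input."""
    if hi <= lo:
        return 0.0
    return min(1.0, max(0.0, (value - lo) / (hi - lo)))


def density_to_voxel(density, lo, hi, max_alpha=0.1):
    """Hypothetical transfer function: low density is blue, high density is red.

    Alpha grows linearly with the normalized density, so dense regions
    become opaque sooner than sparse ones.
    """
    t = normalize(density, lo, hi)
    return Voxel(color=(t, 0.0, 1.0 - t), alpha=t * max_alpha)
```

The same shape works for pressure. Acceleration is a vector, so it would need a different mapping (for example, one component per color channel), and it cannot drive alpha directly without first being reduced to a scalar.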

Technologies

We used our ray tracer as a starting point, which made the task of rendering a volume seem easy. As we eventually discovered, however, ray tracing a volume is quite different from ray tracing a surface. Both use the idea of shooting rays into the scene, but little else after this point is the same. As discussed in the Screw-Ups section, we attempted a volume tracing method that reused more of our original ray tracer, but this was eventually abandoned for a much simpler method that provided much better results.

The final version of our implementation functions as follows:

1. Cast a fat ray from the camera and see if it hits the volume. If the volume is hit:

2. Determine which voxel the fat ray hit/stopped in.

3. Update the color contained in the fat ray using the color and alpha value of the voxel.

4. Advance the fat ray forward by less than half of the voxel size.

5. Repeat parts 2-4 until the fat ray exits the volume or the fat ray's alpha value has reached a threshold.

Our volume is contained in a box, allowing us to use a box intersection test from our original ray tracer to accomplish Part 1. Then, in Part 2, the first voxel hit by the fat ray is easily found by converting the intersection point into indices of the three-dimensional array containing the voxels. The color of the voxel is then multiplied by its alpha value and added to the fat ray's color in Part 3. In Part 4, the fat ray is advanced so that the process can be repeated for a position deeper in the volume.
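The steps above can be sketched in Python roughly as follows. This is not our actual code: the function names, the nested-list `grid` layout holding `((r, g, b), alpha)` per voxel, the axis-aligned cubic voxels, the unit-length `direction`, and the 0.4 step factor are all assumptions chosen to keep the example small.

```python
import math


def intersect_box(origin, direction, box_min, box_max):
    """Slab test. Returns (t_near, t_far), or None if the ray misses the box."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            if o < lo or o > hi:
                return None  # parallel to this slab and outside it
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return None
    return t_near, t_far


def voxel_index(point, box_min, voxel_size, dims):
    """Convert a world-space point to (i, j, k), or None if outside the grid."""
    idx = tuple(math.floor((p - lo) / voxel_size) for p, lo in zip(point, box_min))
    if all(0 <= i < n for i, n in zip(idx, dims)):
        return idx
    return None


def march(origin, direction, grid, box_min, voxel_size, alpha_threshold=1.0):
    """Accumulate color and alpha along one ray; direction is assumed unit length."""
    dims = (len(grid), len(grid[0]), len(grid[0][0]))
    box_max = tuple(lo + n * voxel_size for lo, n in zip(box_min, dims))
    color, alpha = [0.0, 0.0, 0.0], 0.0
    hit = intersect_box(origin, direction, box_min, box_max)  # Part 1
    if hit is None:
        return tuple(color), alpha
    step = 0.4 * voxel_size          # less than half a voxel (Part 4)
    t, t_far = hit[0] + 0.5 * step, hit[1]
    while t < t_far and alpha < alpha_threshold:
        point = tuple(o + t * d for o, d in zip(origin, direction))
        idx = voxel_index(point, box_min, voxel_size, dims)   # Part 2
        if idx is not None:
            (r, g, b), a = grid[idx[0]][idx[1]][idx[2]]
            color[0] += r * a                                  # Part 3
            color[1] += g * a
            color[2] += b * a
            alpha += a
        t += step                                              # Part 4
    return tuple(color), alpha
```

Because each sample adds the voxel's full alpha, the accumulated result depends on the step size: halving the step roughly doubles the opacity gathered through the same voxel. A sketch like this would need the per-voxel alpha tuned together with the step.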

Given this basic implementation, we wanted to add shading to the volume. To do this, each time we stepped through the volume, we cast a fat ray from the current position to the light. We refer to this ray as a shadow ray because it determines how much that portion of the volume is shaded from the light. We then incrementally follow this ray through the volume, similar to the basic implementation. As we step through the volume to the light, however, we subtract each voxel's color from the fat ray's color rather than adding it. Once the shadow ray has made it through the whole volume to the light, its total color (expected to be negative) is added to the color of the light and then multiplied by the color found in Part 3 of the basic implementation.
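The shadow-ray arithmetic can be sketched in Python as below. The names `shadow_term` and `shade` are hypothetical, the shadow ray's samples are passed in as a plain list of `((r, g, b), alpha)` pairs rather than gathered by marching, and two details are assumptions on our part: weighting each subtracted color by the voxel's alpha, and clamping the lit color at zero so heavily occluded samples go black instead of negative.

```python
def shadow_term(shadow_samples, light_color):
    """Light color remaining after the shadow ray passes through the volume.

    shadow_samples: ((r, g, b), alpha) for each step from the current
    position toward the light.
    """
    shadow = [0.0, 0.0, 0.0]
    for (r, g, b), a in shadow_samples:
        # Subtract instead of add: the running total is expected to go negative.
        shadow[0] -= r * a
        shadow[1] -= g * a
        shadow[2] -= b * a
    # Assumption: clamp at zero so the result never becomes a negative color.
    return tuple(max(0.0, l + s) for l, s in zip(light_color, shadow))


def shade(sample_color, shadow_samples, light_color=(1.0, 1.0, 1.0)):
    """Multiply the color found in Part 3 by the attenuated light color."""
    lit = shadow_term(shadow_samples, light_color)
    return tuple(c * l for c, l in zip(sample_color, lit))
```

Since a shadow ray is marched for every step of every viewing ray, the cost of shading grows with the square of the number of steps through the volume, which is consistent with the much longer shaded render times we measured.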

Parsing the volume file takes up a significant portion of the total run time. This time could easily be reduced by defining a more condensed file format.

Specs

94 x 94 x 94 grid

- 1000 x 1000 image

- No shading

- 7 seconds

94 x 94 x 94 grid

- 1000 x 1000 image

- Shading

- 590 seconds

170 x 170 x 170 grid

- 640 x 640 image

- No Shading

- 15 seconds

170 x 170 x 170 grid

- 800 x 800 image

- Shading

- 15 seconds

Animation

- 640 x 640 image

- No Shading

- 15 seconds per frame

- 100 seconds to parse each frame

- 5.5 GB of data

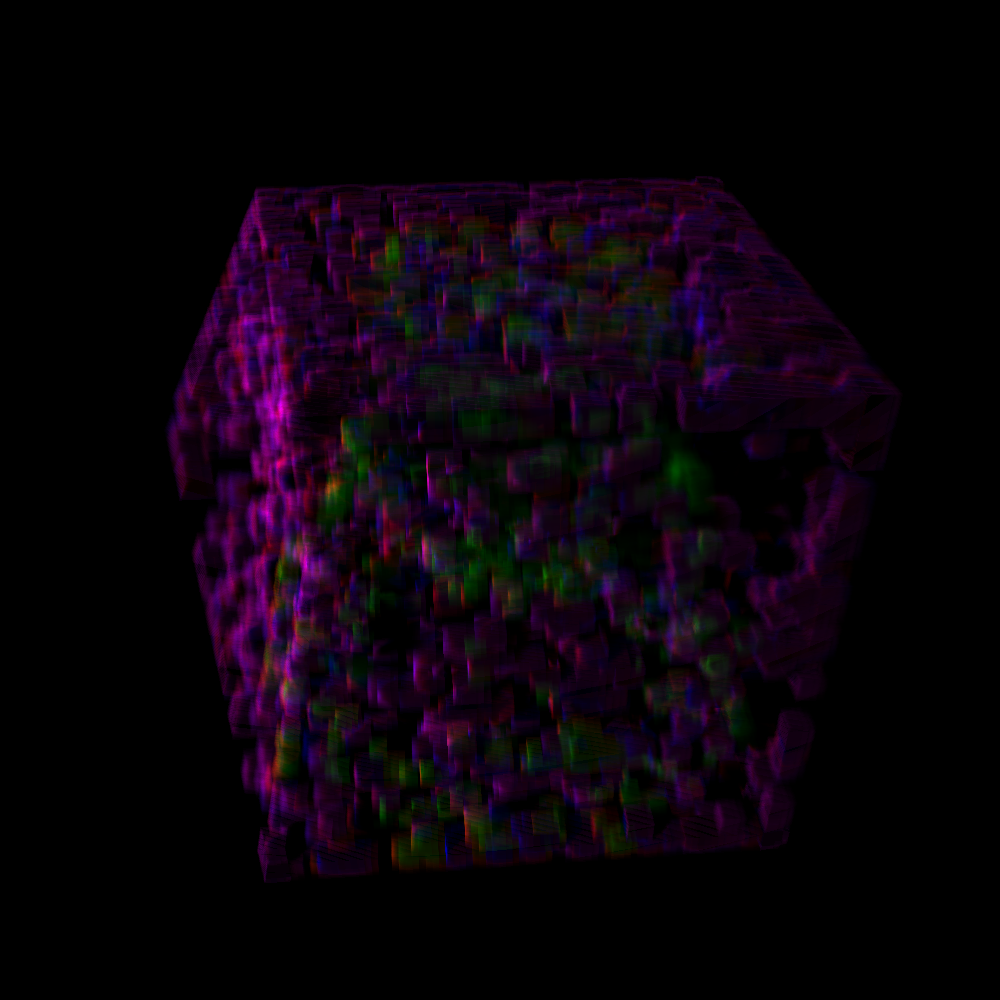

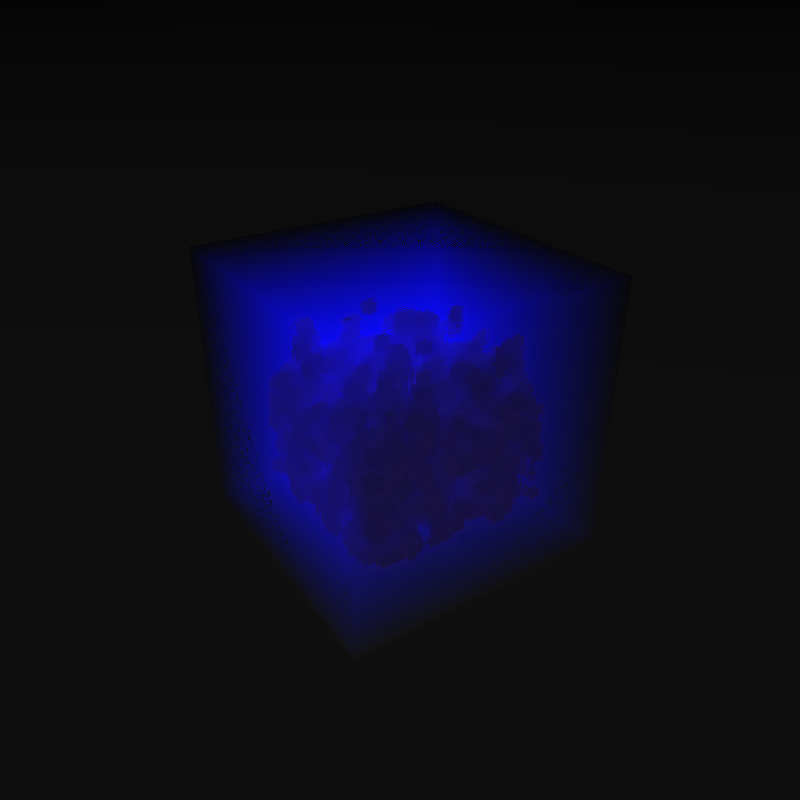

Renders

Screw-Ups

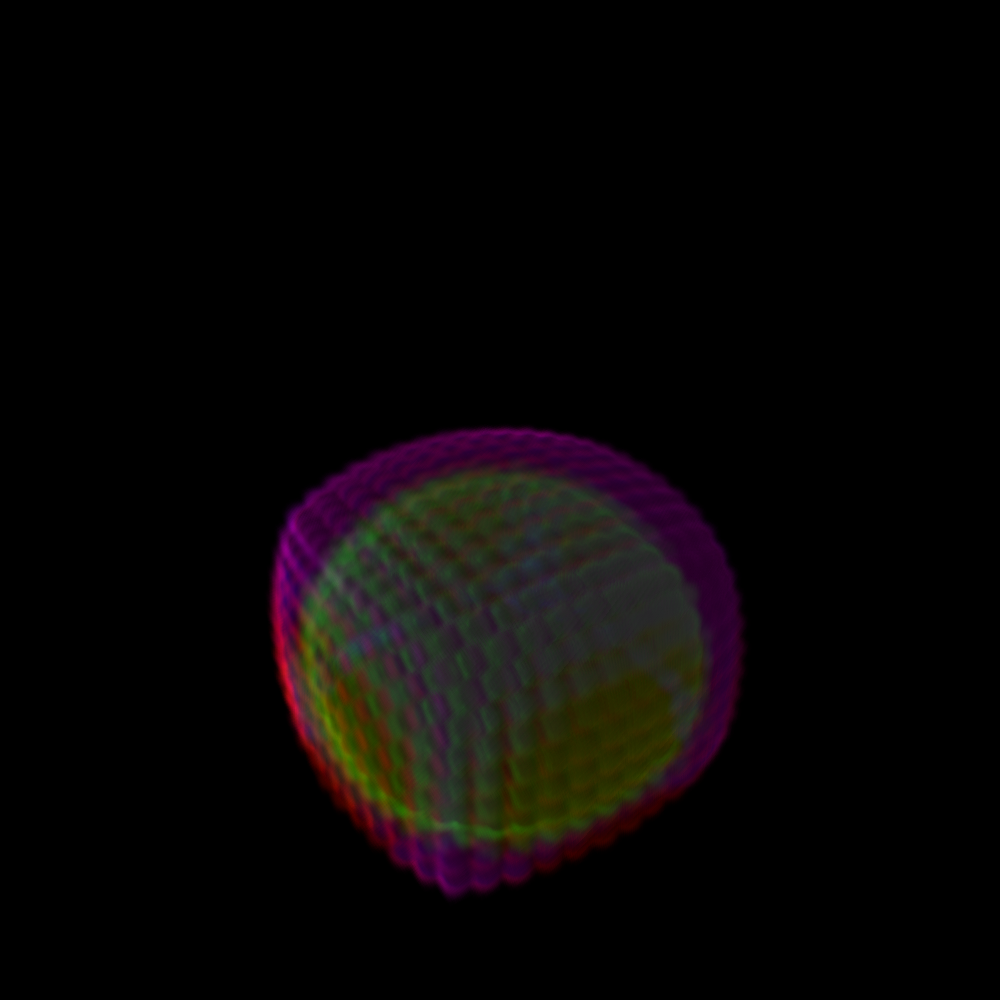

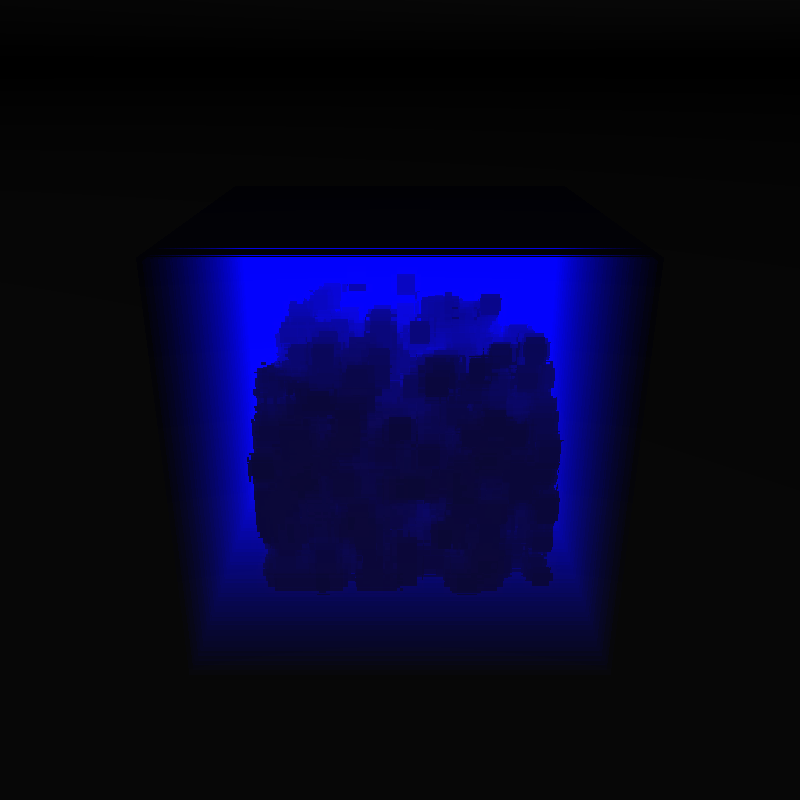

Halfway through our project we realized that our algorithm was wrong and inefficient. At each voxel intersection we were doing a ray-box intersection test against that voxel's neighbors. While conceptually simple, this approach actually requires more code and leaves more room for error. As the ray parameter t increases, so does the floating-point error. This led to some interesting bugs, notably a speckled image as well as odd plane artifacts.

We then realized that a voxel grid doesn't have overlapping items, and thus, given a point in world space, we could determine whether we were inside the voxel grid and exactly which voxel we were in. So we switched to the simpler algorithm, in which we advance our ray a little bit and collect the data for the voxel we currently reside in.

Nonetheless, our faulty algorithm made some pretty interesting bug images:

References

- Smooth Hydrodynamic Particles Paper - Suzie's inspiration for her thesis

- 473 Final Project Review - where we realized our algorithm was wrong.

- SlideJS jQuery Plugin