Description

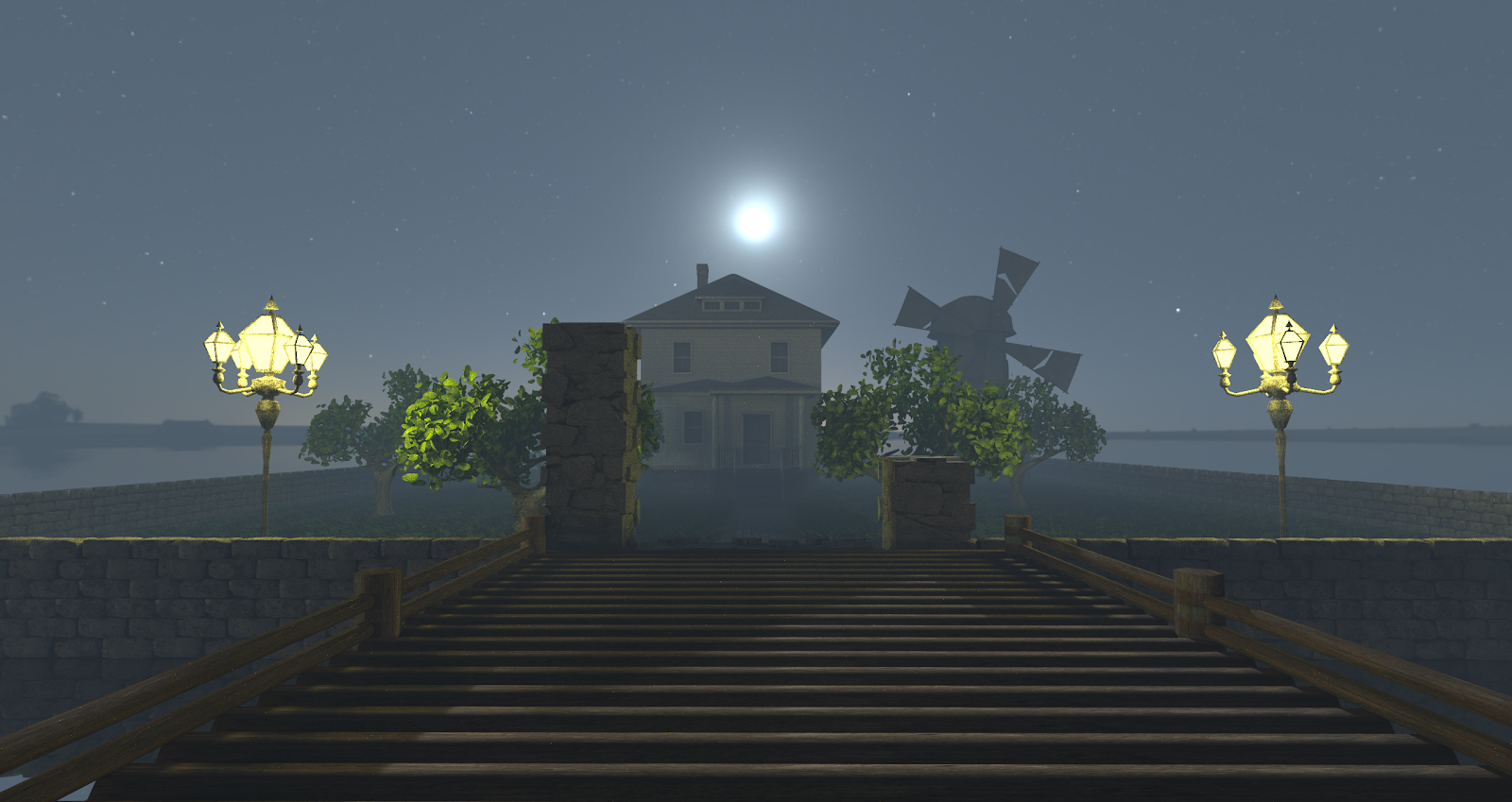

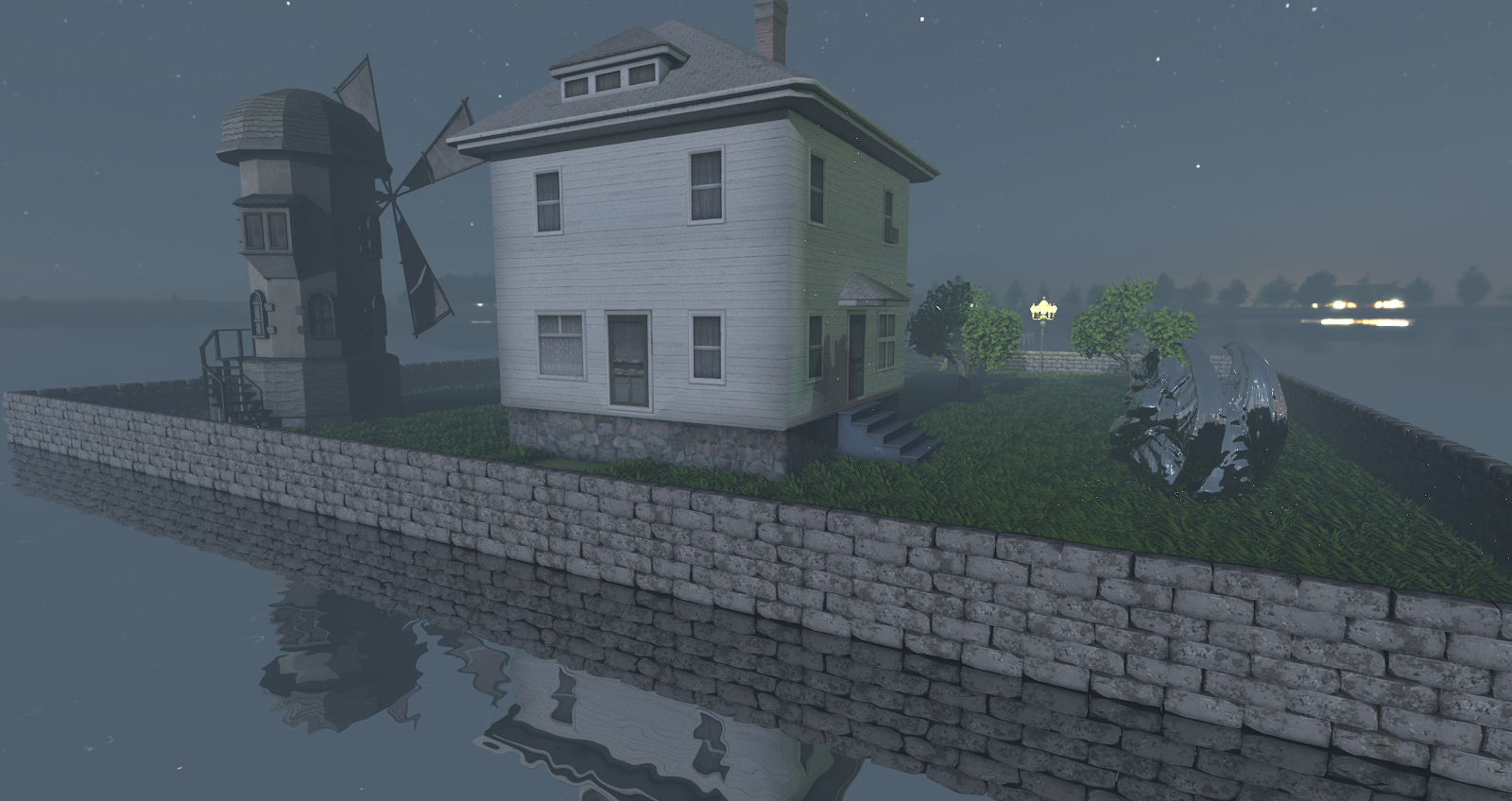

The goal of this project was to create a real-time raytracer capable of rendering a realistic-looking lakehouse on a foggy night using OptiX.

Feature summary:

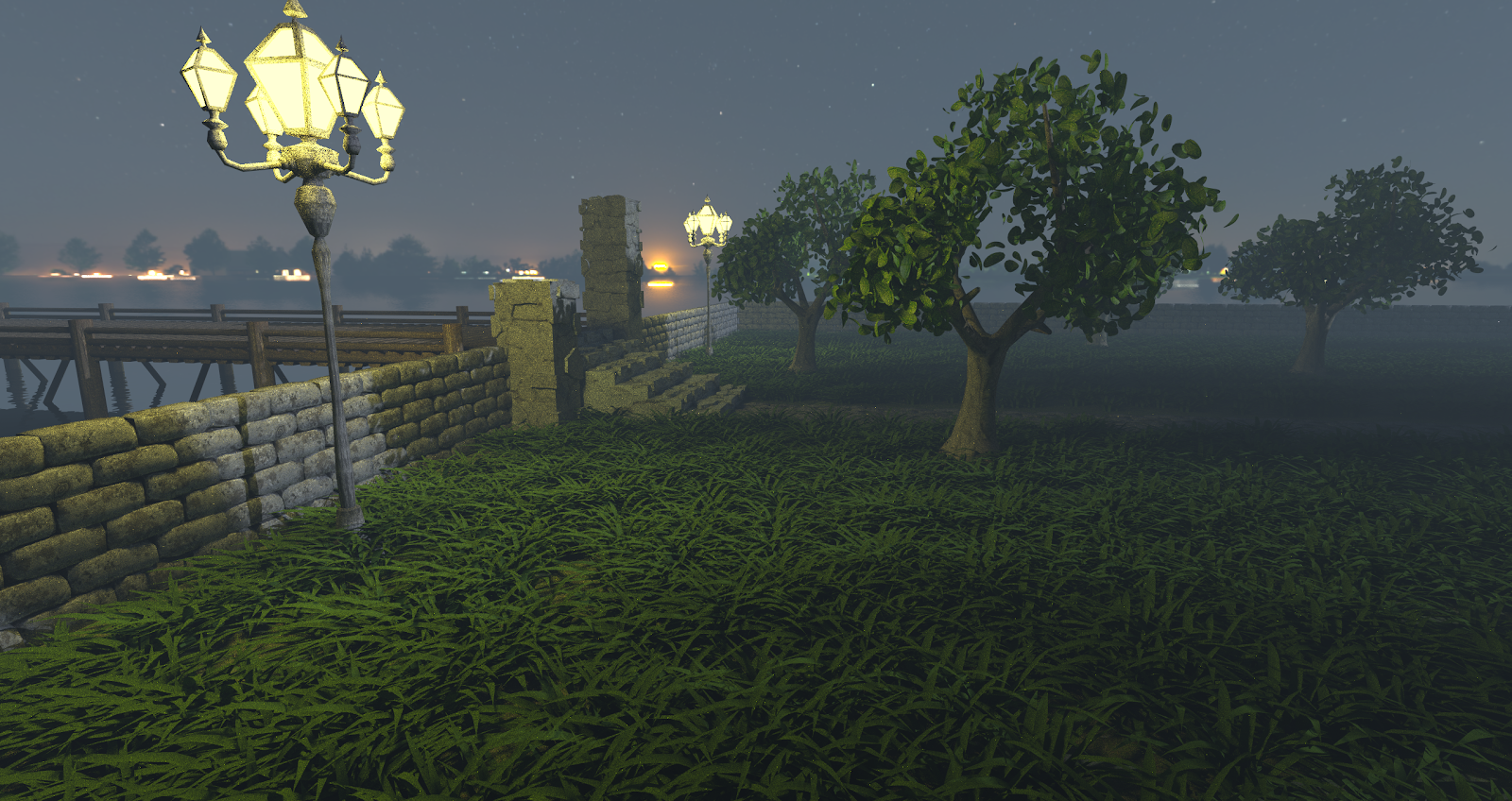

- Diffuse and specular materials

- OBJ+MTL file loading and rendering

- Texture mapping

- Environment sampling

- Triangle lights

- Importance sampling for direct lighting

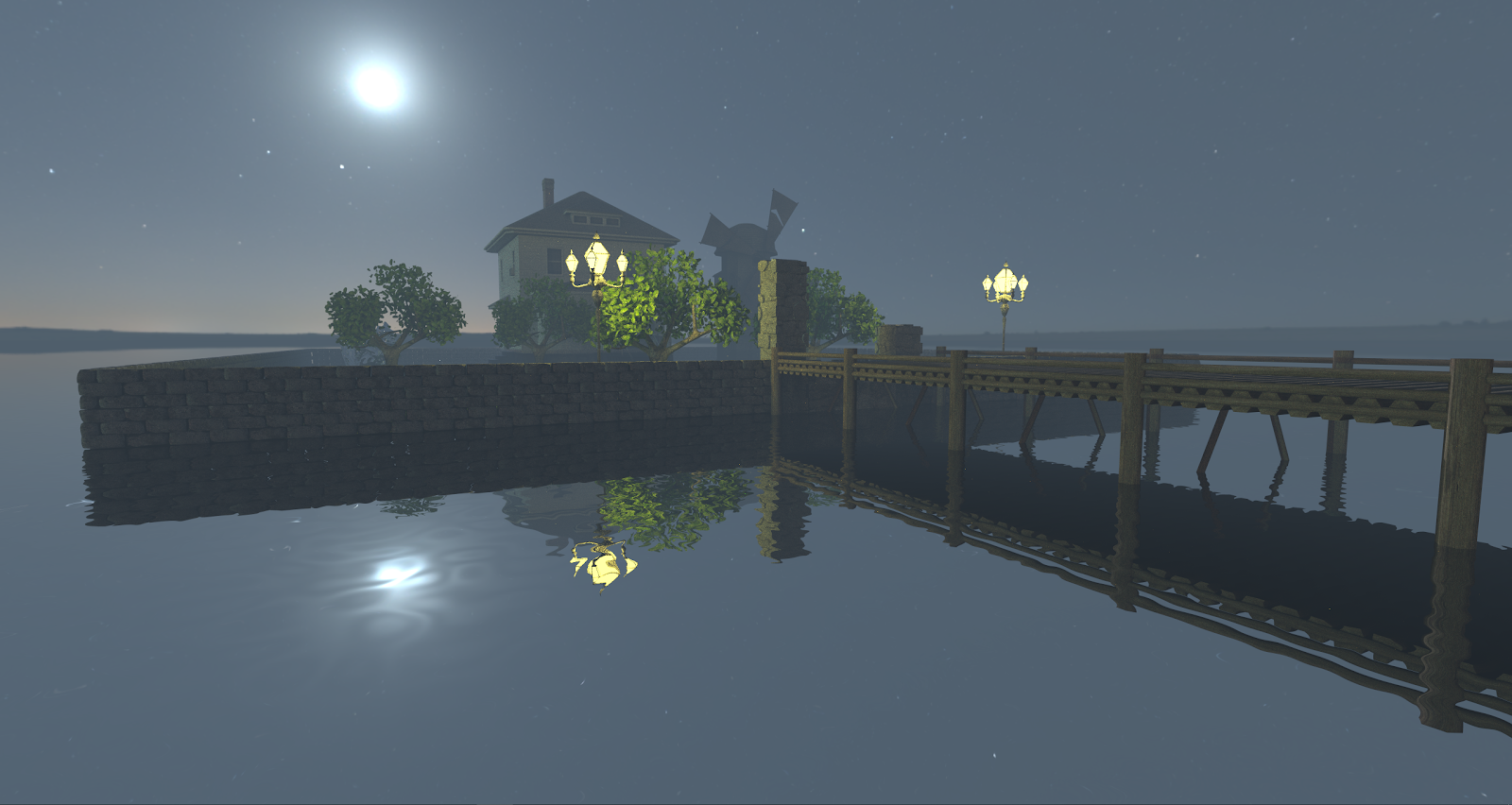

- Ripply water

- Fog (non-volumetric)

Demo


Implementation

I started with NVIDIA's OptiX 7 intro sample. This provided a basic implementation for adding materials, sampling from an environment map, and texture mapping. For brevity, I'll be focusing on the interesting challenges I faced rather than going into detail about OptiX features or the NVIDIA sample's implementation.

My first addition was OBJ and MTL file support, so that I could load custom meshes with material properties. NVIDIA's sample implementation only supported per-mesh materials, while OBJ files may assign materials per face, which some assets require (the lamp mesh I used, for example, has emissive glass panels). To handle this, I added a FaceAttributes struct that describes the characteristics of a triangle, much as VertexAttributes describes vertex data (position, normal, texcoord, etc.). The GeometryInstanceData struct, which the shader binding table uses to describe instanced geometry, holds a pointer to an array of FaceAttributes so that the shaders can access them. This lets a shader look up the properties of the specific triangle it hit, such as whether it is textured or emissive.
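As a rough illustration of the per-face lookup described above, here is a minimal host-side C++ sketch. The struct names FaceAttributes and GeometryInstanceData come from the write-up, but every field (`textureId`, `emission`, `faces`, `faceCount`) and both helper functions are assumptions made for this example, not the project's actual layout. In a real OptiX hit program the triangle index would come from `optixGetPrimitiveIndex()`.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical fields: the write-up only says a face may be textured or emissive.
struct FaceAttributes {
    std::int32_t textureId;  // index into a texture table, or -1 if untextured
    float emission[3];       // emitted RGB radiance; all zeros if not emissive
};

// Hypothetical subset of the per-geometry record referenced by the shader binding table.
struct GeometryInstanceData {
    const FaceAttributes* faces;  // one entry per triangle (a device pointer in the renderer)
    std::uint32_t faceCount;
};

inline bool isEmissive(const FaceAttributes& face) {
    return face.emission[0] > 0.0f || face.emission[1] > 0.0f || face.emission[2] > 0.0f;
}

// Looks up the attributes of the triangle that was hit.
inline const FaceAttributes& faceForHit(const GeometryInstanceData& geometry,
                                        std::uint32_t primitiveIndex) {
    assert(primitiveIndex < geometry.faceCount);
    return geometry.faces[primitiveIndex];
}
```

Keeping one small record per triangle mirrors how OBJ files assign materials with `usemtl` between groups of faces, at the cost of some extra memory per mesh.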

Since material properties exist per geometry, not per instance, emissive faces had to be handled specially. When calculating direct lighting for a diffuse surface, the closesthit shader selects a random light in the scene to sample. Because the number of lights in the scene depends on how many instances there are of geometry with emissive triangles, the scene lights are generated in a second pass, once every instance has been created.
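The second pass could look something like the sketch below. All types here (`Mesh`, `Instance`, `LightRef`) and the function `collectTriangleLights` are hypothetical stand-ins, not the project's code; the only idea taken from the write-up is that each instance of a mesh with emissive triangles contributes its own set of lights.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

struct Mesh {
    std::vector<bool> faceIsEmissive;  // one flag per triangle (hypothetical)
};

struct Instance {
    std::uint32_t meshIndex;  // which mesh this instance places in the scene
};

// A scene light is identified by the instance it belongs to and the emissive face.
struct LightRef {
    std::uint32_t instanceIndex;
    std::uint32_t faceIndex;
};

// Runs after all instances exist: every (instance, emissive face) pair becomes a light.
std::vector<LightRef> collectTriangleLights(const std::vector<Mesh>& meshes,
                                            const std::vector<Instance>& instances) {
    std::vector<LightRef> lights;
    for (std::uint32_t i = 0; i < instances.size(); ++i) {
        assert(instances[i].meshIndex < meshes.size());
        const Mesh& mesh = meshes[instances[i].meshIndex];
        for (std::uint32_t f = 0; f < mesh.faceIsEmissive.size(); ++f) {
            if (mesh.faceIsEmissive[f]) {
                lights.push_back({i, f});
            }
        }
    }
    return lights;
}
```

With one lamp mesh that has 80 emissive triangles and two instances of it, this yields 160 triangle lights.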

The main challenge with lighting was that the scene ended up with 161 light sources: 80 emissive triangles for each of the two streetlamp instances, plus the environment map. If a single light source is chosen uniformly at random for each diffuse surface hit, the noise takes a long time to converge, and simply taking more light samples per hit hurts performance dramatically. To solve this, my implementation samples the lights that contribute the most to the scene more frequently. Since the vast majority of the light comes from the environment map, it has a high chance of being sampled for direct lighting. Each triangle on a streetlamp, by contrast, has a rather low probability. The result is an image that converges more quickly at virtually no performance cost.
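One common way to pick lights in proportion to their contribution is to build a cumulative distribution over per-light weights and invert it with a uniform random number. The sketch below assumes that approach; the write-up does not say how the weights are chosen or stored, so `LightSampler`, `buildLightSampler`, `pickLight`, and the weights themselves are illustrative only.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct LightSampler {
    std::vector<float> pdf;  // pdf[i]: probability of choosing light i
    std::vector<float> cdf;  // cdf[i]: probability of choosing a light with index <= i
};

// weights[i] is a non-negative estimate of how much light i contributes (assumed given).
LightSampler buildLightSampler(const std::vector<float>& weights) {
    assert(!weights.empty());
    float total = 0.0f;
    for (float w : weights) total += w;
    assert(total > 0.0f);

    LightSampler sampler;
    float running = 0.0f;
    for (float w : weights) {
        running += w;
        sampler.pdf.push_back(w / total);
        sampler.cdf.push_back(running / total);
    }
    sampler.cdf.back() = 1.0f;  // guard against rounding error in the last entry
    return sampler;
}

// u is a uniform random number in [0, 1). Returns the index of the chosen light.
std::size_t pickLight(const LightSampler& sampler, float u) {
    auto it = std::upper_bound(sampler.cdf.begin(), sampler.cdf.end(), u);
    std::size_t index = static_cast<std::size_t>(it - sampler.cdf.begin());
    return std::min(index, sampler.cdf.size() - 1);
}
```

To keep the estimate unbiased, the radiance returned by the chosen light is divided by `pdf[index]`, so a rarely picked streetlamp triangle is weighted up whenever it is selected.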

Ripply water could have been handled in multiple ways. I chose to put my implementation in the miss shader, which runs when a ray travels through the scene without hitting any objects. If the ray misses below the horizon (that is, it is heading in the negative Y direction), the shader calculates the point at which the ray crossed the horizon and reflects the ray at that point. To give a ripple effect, the reflected direction is offset by an angle calculated with Perlin noise. Some light is also absorbed to give the water some perceived depth. To prevent underwater objects from appearing in front of reflected objects, the anyhit shader discards hits that lie below the horizon.
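The geometry of the water bounce can be sketched as follows, assuming the water surface is the plane y = 0. This is not the project's shader code: the ripple is modeled here by tilting the surface normal by two small angles (which would come from Perlin noise evaluated at the hit point), which is one possible way to offset the reflected direction, and `kWaterReflectance` is a made-up constant standing in for the absorption.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

static Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

struct WaterBounce {
    Vec3 origin;      // where the ray meets the water plane y = 0
    Vec3 direction;   // reflected direction (same length as the incoming one)
    float throughput; // fraction of light kept after the bounce
};

constexpr float kWaterReflectance = 0.8f;  // hypothetical absorption factor

// origin must be above the water and dir must point downward.
// tiltX and tiltZ are small ripple angles in radians (assumed to come from noise).
WaterBounce reflectOffWater(Vec3 origin, Vec3 dir, float tiltX, float tiltZ) {
    assert(origin.y > 0.0f && dir.y < 0.0f);
    float t = -origin.y / dir.y;  // ray parameter at which y reaches 0
    Vec3 hit = {origin.x + t * dir.x, 0.0f, origin.z + t * dir.z};

    Vec3 n = normalize({std::tan(tiltX), 1.0f, std::tan(tiltZ)});  // rippled normal
    float d = dot(dir, n);
    Vec3 reflected = {dir.x - 2.0f * d * n.x, dir.y - 2.0f * d * n.y, dir.z - 2.0f * d * n.z};
    return {hit, reflected, kWaterReflectance};
}
```

With both tilt angles at zero this reduces to a perfect mirror: the Y component of the direction flips and the other components are unchanged.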

Instead of implementing a volumetric fog model, which could have slowed down the frame rate significantly, I created an effect that gives the general illusion of fog without being physically accurate. I simulated a fog layer of varying density: densest at the horizon, then decreasing linearly until it vanishes about 200 meters above the horizon. That way, the fog doesn't completely occlude the sky, but its effect extends far into the distance horizontally. Fog is accumulated per ray, based on the total distance the ray travels and the parts of the fog layer it passes through. The resulting "fogginess" factor is then scaled logarithmically to give a noticeable effect without becoming overwhelming in the distance, and the scaled value is factored into the final color of the ray.
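A minimal sketch of this kind of fog accumulation is shown below. Only the linear falloff and the 200-meter layer height come from the description; the density at the horizon, the logarithmic scale factor, the blend in `applyFog`, and all function names are assumptions chosen for illustration. Because the density is linear in height inside the layer, the average density along a ray segment equals the density at the midpoint of the part of the segment that lies within the layer.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

constexpr float kFogTop = 200.0f;          // layer height in meters (from the description)
constexpr float kDensityAtHorizon = 0.01f; // hypothetical density at y = 0
constexpr float kLogScale = 0.25f;         // hypothetical strength of the log scaling

// Fog gathered along one ray segment that starts at height yStart, ends at yEnd,
// and has the given total length. Heights are measured from the horizon (y = 0).
float fogAlongSegment(float yStart, float yEnd, float length) {
    assert(length >= 0.0f);
    float lo = std::min(yStart, yEnd);
    float hi = std::max(yStart, yEnd);
    float clipLo = std::max(lo, 0.0f);
    float clipHi = std::min(hi, kFogTop);
    if (clipHi < clipLo) return 0.0f;  // segment lies entirely outside the layer

    // Fraction of the segment's length that is inside the layer.
    float fraction = (hi > lo) ? (clipHi - clipLo) / (hi - lo) : 1.0f;
    float midHeight = 0.5f * (clipLo + clipHi);
    float averageDensity = kDensityAtHorizon * (1.0f - midHeight / kFogTop);
    return averageDensity * fraction * length;
}

// Logarithmic scaling of the accumulated fog into a blend factor in [0, 1].
float fogFactor(float accumulatedFog) {
    return std::min(1.0f, kLogScale * std::log1p(accumulatedFog));
}

// Blends one color channel toward the fog color (an assumed way to apply the factor).
float applyFog(float channel, float fogChannel, float factor) {
    return channel + (fogChannel - channel) * factor;
}
```

For a path with several bounces, the per-segment amounts would be summed before calling `fogFactor`, so the scaling applies to the total fog the ray has passed through.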