Markerless Augmented RealityGreg Eddington

Project Goals

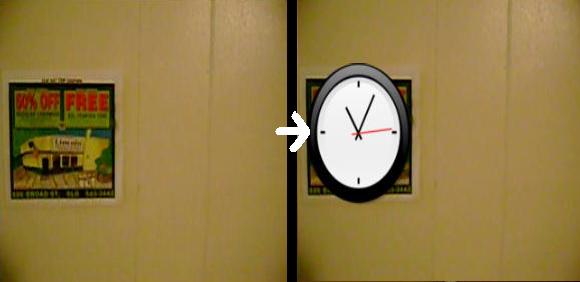

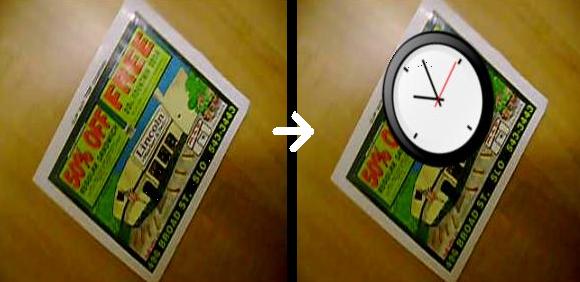

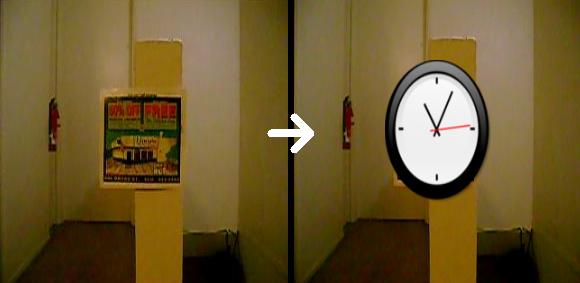

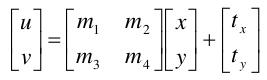

DescriptionThe concept of the algorithm presented in this project is based on the fact that if three points on an affine surface can be found and matched between two frames on a video, then an affine transformation can be found which transforms the points from the first frame to the positions in the second frame using the equation below. This transformation can then be applied to the vertices used to create a virtual image, making it appear to move in the same manner as the surface in the video. This creates the ability to augment the video with the virtual image in a realistic manner, as the virtual image moves in the world in the same way as another object. In order to match three points from a affine surface in one frame to the same affine surface in another, two identification processes must first occur. The first is to define the region of the affine surface and limit the search for points to only that surface. The second is to search that region for points which are unique and distinguishable, so that they can be matched in sequential frames of the video. This project used two previously existing algorithms to perform these two functions. To find the affine surface, the MSER algorithm was used, and to find keypoints within the surface, the SIFT algorithm was used.

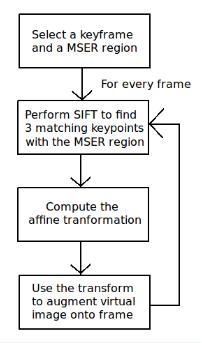

MSER will sort all gray-scale pixels in an image by intensity, and then iterate through ascending levels of thresholds to produce binary black and white images, with the first sequence being all white and the last being all black. It then finds blobs which are produced during the thresholding processes, and marks blobs which maintain shape for a certain number of thresholds as a stable region. These blobs are then abstracted with an ellipse, which is used to constrain the search space. SIFT begins by taking a frame and creating several blurred version of it using a Gaussian blur, to increase the algorithms invariance to scale. Difference of Gaussiance (DoG) images are then created by subsracting adjacent images in the Gaussian blurring process, to approximate a Laplacian of Gaussian and produce an image that is invariant to image changes. The maxima and minima pixels are then found by iterating through all pixels and looking for pixels which are the maxima or minima of the 26 adjacent pixels (8 neighbors in the current DoG, 9 neighbors in the DoG produced by the previous level of blurring, and 9 neighbors in the DoG produced by the next level of blurring). These pixels are now considered keypoints, and are further analyzed to filter out noisy keypoints. The contrast of the keypoints with their neighbors are analyzed, and low contrast points are removed. Poorly localized points are then filtered out by removing points which have a high principle of curvature in one direction, but not in another, suggesting that it is lying on an edge of a surface. A 128 element vector key is calculated for each point by calculating a histogram of neighboring pixels. ImplementationA high level flow of the algorithm is shown below. The keyframe from which augmentation is relative to is the first frame of the input. The user can select a MSER ellipse to use as the augmentation surface. Before augmentation occurs, every frame is loaded into memory and the MSER and SIFT algorithms are performed on every frame. This is not necessary, as only the initial keyframes requires the MSER algorithm, but being able to visualize the MSER ellipses across all frames proved useful when implementing the algorithm. In a real-time system, MSER should only be performed until a keyframe is selected. Similarly, only the keyframe and final frame are needed for augmentation, but storing every frame was useful for analysis. Once the keyframe is selected, then a SIFT search is performed on it to find all keypoints within the selected MSER ellipse. For every subsequent frame, a SIFT search is performed to find all keypoints within the image. Using the 128 bit key, each keypoint is compared to each keypoint within the MSER ellipse of the original frame, calculating the distance between the two keys. Keys below a defined threshold are considered matching, and all others are considered unmatched. The three best matching keypoint pairs are chosen for computing the affine transformation matrix. This augmentation is stored for the given frame, and the process is repeated for every frame in the sequence. The user is able to cycle through all the frames of the input, and can turn on and off various view controls. The MSER ellipses can be shown as blue ellipses and the SIFT keypoints can be shown as red glyphs. The ellipses and keypoints can also be narrowed down to the selected ellipse and matching keypoints. The user can also toggle augmentation on and off. Cycling through all frames with augmentation on allows the user to view the augmented video. Results

Download

How To Useusage: aug <input> <image> <target-ellipse-x> <target-ellipse-y> <aug-x> <aug-y>

Controls

Future WorkI plan on continuing this work for my senior project. The main goal will be for real time augmentation, which will require two changes to the current program. The first is to change the input source for the program from a series of image files to a webcam using a library designed to continuously pull frames from the camera. The second is to profile and optimize the actual augmentation algorithm so that it can run at an acceptable rate for a real time graphics application.The second main goal of the project is to create a framework which provides a set of tools for programmers to incorperate markerless tracking into their programs. This entails making parameterization of the algorithm easier, providing a simpler interface for selected a source to augment, and providing an API so that users can incorperate the library into their code. |