The philosphy of the language seems to be "safe by default." For example, like C++, rust has compile time const/mutability checking. But in rust, variables are immutable by default, instead of in C++ where you have to remember to mark a variable as const. On the runtime side, unlike C++, rust checks array bounds on indexing. C++ emits these checks for performance, but rust takes the stance that you don't need that performance in the majority of cases. For the cases that really need the extra performance, rust provides an "unsafe" method of indexing arrays without the check.

Rust claims to be a systems programming language, but sometimes it feels like a high level language. Some of the first things I noticed upon learning rust were the type inference and pattern matching. As I started to architect my program though, I started to fall into a mix of an object oriented design for data storage, but a functional programming design for data flow. I think my desire to use a functional programming approach stemmed from the immutability by default. I found my self thinking carefully every time I wanted a mutable variable and often deciding that I could do the same thing without one. Rust provides many functions that operate on iterators to "map" data, "filter" it, and finally "fold" the iterator into a result when the application calls for it. (It turns out you can do this in standard C++ as well, but I hadn't heard about it until I went looking for it.)

I didn't make a comparable solution in another language, and I don't have any benchmark for how fast photon mapping should be, so I don't know how well my program written in rust performs. That said, it doesn't feel slow.

The release of version 1 marked the commitment from the community for future versions of the language to be backwards compatible. In order to do this, they marked any features they didn't want to commit to as "unstable" and compiler will not use them unless you are on the nightly build. Lots of base functionality is there, however I found myself wanting to use features marked as "unstable" multiple times, often giving up on having the functionality (in the case of threading) or having to implement it myself (in the case of finding min/max or sorting floats)

One of the tradeoffs for all of the extra compile time saftey, is compile time complexity. Now part of the problem is probably just that this is a new language for me, but, I'm sure some of the difficulty is just the price of admission. I feel the benefits are worth it, but it was annoying nonetheless.

As part of the safe by default philosophy, there are no implicit type conversions in rust. The syntax for conversion is nice, but you have to use it every time. There are a few other little things like this and they start to add up.

I had a hard time finding tools like a profiler for rust. I'm not too surprised because it's such a younge language, but it worth noting. Especially for a language designed for performance and whose philosphy is to only do dangerous optimizations when you really need them, it would be nice to know which parts of the code need that.

The classic implementation of photon maps has a global photon map for the entire scene. At the point when the photon map is being sampled though, the ray tracer has already done a lot of the work by finding out which object it hit. By storing separate separate photon maps for each object, I hoped to leverage the raytracing and reduce the time needed to search for nearby photons.

I see some additional benefits to storing the photons this way. First is that we can now use a 2d data structure mapped to surface of an object instead of using a bigger 3d data structure. Another benefit is that it solves the problem of photons on the surface of other objects contributing to the radius of an object in corners.

The original developers of photon mapping advocate the use of a KD-tree to store the photons. This recomendation makes sense for a global photon map, but since I now store a photon map per object, we can narrow down the search space to the surface of an object, and a KD-tree seems like overkill.

Because my program only supports spheres, I wanted to tailor the datastructure for the surface of a sphere. Spatial partitioning (like KD-tree) still seemed like the way to go and I wanted to split the surface like longitude/latitude does (though unaligned to avoid aliasing at the poles). I was lazy though and didnt want to map coordinates to sphere coordinates, so instead, keeping in spirit with KD-trees, I used planes to partition the surface. By having the planes intersect the center of the sphere, they are reminicent of lat/long, and I believe I still take advantage of the knowledge that the photons lay on the surface.

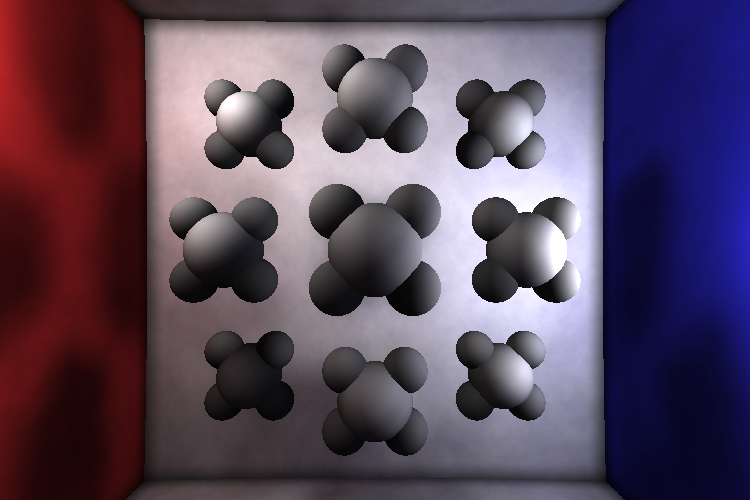

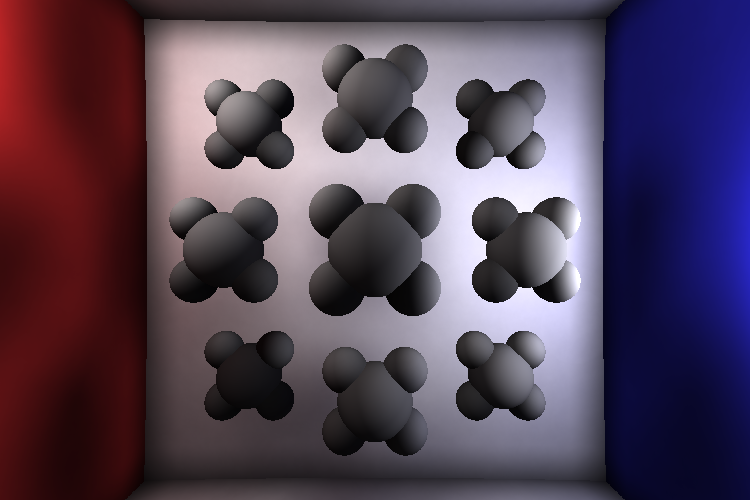

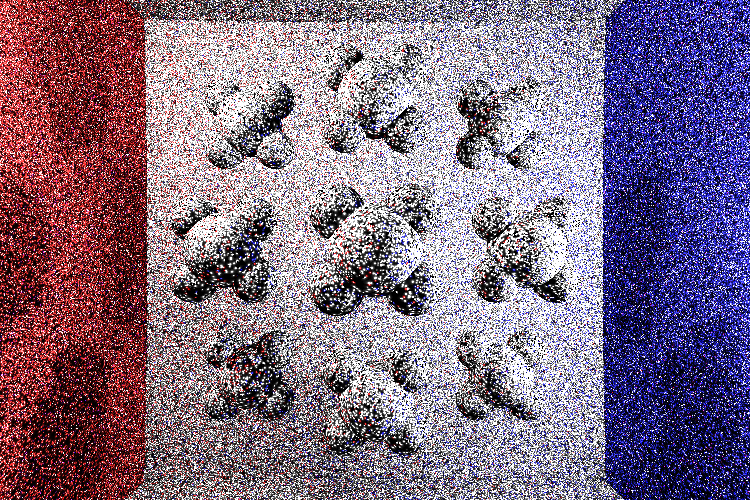

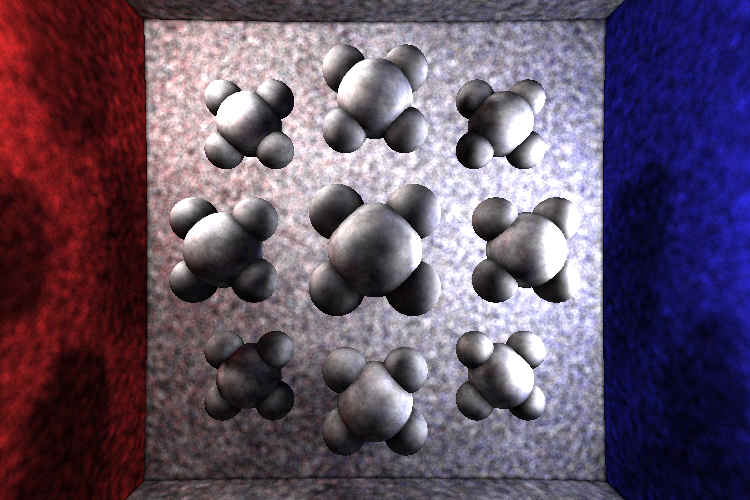

Photon maps are queried for every ray cast during rendering. This makes this algorithm critical to performance of the program. I started by implementing an N nearest neigbors algorithm as suggested by the original creaters of the photon map. This seemed ok performance wise for small a small N (on the order of 10), but the renderings were very noisy, regardless of the number of photons with < ~1000 photons sampled, and my N nearest neighbors algorithm could not scale that high. So instead I scrapped nearest neighbors and implemented a radius search, returning all photons within a certain radius of the search point. This implementation gave the rendering an order of magnitude speedup, allowing it to sample enough photons to elliminate the worst of the noise.

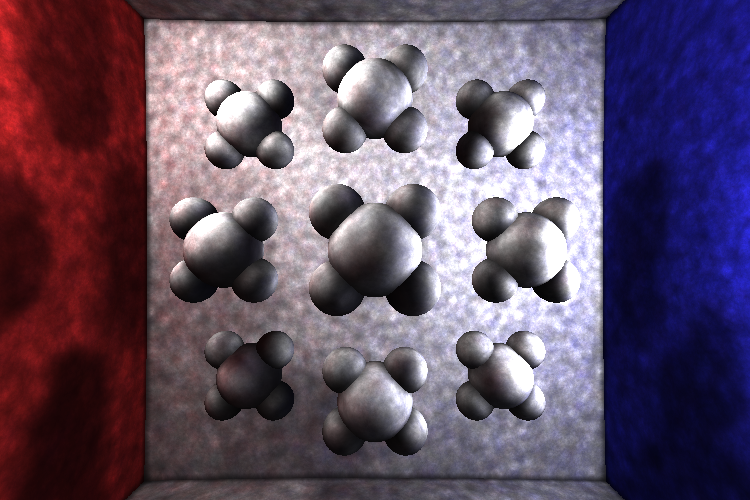

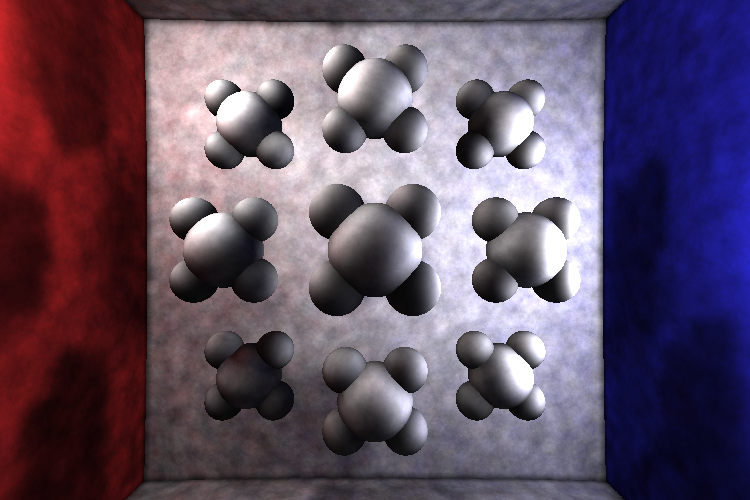

Sweet spot is somewhere is here. Too few photons and its noisy, to many and its blurry.