Photon mapping is a rendering method that generates an image by shooting "photons" into a scene. Photons are emitted from every light source in the scene and can be traced through it using ray tracing or path tracing methods. Wherever a photon hits an object, it is stored in a photon map for later lookup. After all the photons have been cast, the scene is rendered with ray tracing, and the lighting components at each point are computed from the stored photons.

For my implementation, I render a scene with a single point light source. I cast a number of photons from the light into the scene in random directions. When a photon hits an object in the scene, a photon object is created and stored in a list. This object holds the collision position, the incident direction, the color of the photon (initially the color of the light), and the photon's power. I then decide whether the photon reflects using Russian roulette: a random number is compared against the diffuse value of the surface's finish. If the photon reflects, it picks up the color of the surface, leaves in a random direction in the hemisphere around the surface normal at the collision point, and is cast into the scene again to repeat the process.
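The casting pass can be sketched in Python as below. This is a minimal illustration under assumptions, not the original code: the scene is reached through a hypothetical `intersect(origin, direction)` callback that returns the hit point, a unit normal facing the photon, the surface color, and the finish's diffuse value. The names `Photon`, `Hit`, `trace_photon`, `emit_photons`, the `max_bounces` safety cap, the `light_power / n` per-photon power, and the `inside_sphere` test scene are all illustrative.

```python
import math
import random
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Vec = Tuple[float, float, float]

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)

def mul(a: Vec, b: Vec) -> Vec:
    # Component-wise product: a photon's color filtered by a surface color.
    return (a[0] * b[0], a[1] * b[1], a[2] * b[2])

@dataclass
class Photon:
    position: Vec   # where the photon hit the surface
    incident: Vec   # direction the photon was travelling when it hit
    color: Vec
    power: float

@dataclass
class Hit:
    point: Vec
    normal: Vec     # unit normal on the side the photon arrived from
    color: Vec      # surface color
    diffuse: float  # diffuse value of the surface finish, in [0, 1]

# Hypothetical scene interface: returns None when the ray escapes the scene.
Intersect = Callable[[Vec, Vec], Optional[Hit]]

def random_unit_vector(rng: random.Random) -> Vec:
    # Uniform direction on the unit sphere: z uniform in [-1, 1], angle uniform.
    z = rng.uniform(-1.0, 1.0)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    r = math.sqrt(max(0.0, 1.0 - z * z))
    return (r * math.cos(phi), r * math.sin(phi), z)

def random_hemisphere(normal: Vec, rng: random.Random) -> Vec:
    # Uniform direction in the hemisphere around `normal` (flip if below it).
    d = random_unit_vector(rng)
    return d if dot(d, normal) > 0.0 else scale(d, -1.0)

def trace_photon(origin: Vec, direction: Vec, color: Vec, power: float,
                 intersect: Intersect, rng: random.Random,
                 photons: List[Photon], max_bounces: int = 50) -> None:
    for _ in range(max_bounces):
        hit = intersect(origin, direction)
        if hit is None:
            return  # photon left the scene
        photons.append(Photon(hit.point, direction, color, power))
        # Russian roulette: the photon survives with probability `diffuse`.
        if rng.random() >= hit.diffuse:
            return  # absorbed
        color = mul(color, hit.color)  # reflected photon picks up surface color
        origin = add(hit.point, scale(hit.normal, 1e-6))  # avoid self-hit
        direction = random_hemisphere(hit.normal, rng)

def emit_photons(light_pos: Vec, light_color: Vec, light_power: float,
                 n: int, intersect: Intersect, seed: int = 0) -> List[Photon]:
    # Cast n photons from a point light in uniformly random directions.
    rng = random.Random(seed)
    photons: List[Photon] = []
    for _ in range(n):
        trace_photon(light_pos, random_unit_vector(rng), light_color,
                     light_power / n, intersect, rng, photons)
    return photons

def inside_sphere(radius: float, color: Vec, diffuse: float) -> Intersect:
    # Hypothetical test scene: the light sits inside a hollow sphere centered
    # at the origin, so every photon hits the inner wall. Normals point inward.
    def intersect(o: Vec, d: Vec) -> Optional[Hit]:
        b = dot(o, d)
        c = dot(o, o) - radius * radius  # negative while o is inside
        t = -b + math.sqrt(b * b - c)    # the far (positive) root
        p = add(o, scale(d, t))
        return Hit(p, scale(p, -1.0 / radius), color, diffuse)
    return intersect

if __name__ == "__main__":
    scene = inside_sphere(5.0, (0.9, 0.2, 0.2), diffuse=0.7)
    stored = emit_photons((0.0, 0.0, 0.0), (1.0, 1.0, 1.0), 100.0, 1000, scene)
    print(len(stored), "photons stored from 1000 emitted")
```

In this sketch a surviving photon keeps its power rather than being scaled down, because the Russian roulette survival test already accounts for the energy the surface absorbs; only the photon's color changes at each bounce.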

After all the photons are cast into the scene, I build a balanced kd-tree from them and then move on to rendering. For rendering, I use traditional ray casting to find points in the scene and then calculate the ambient, diffuse, and specular components of the color at each point to determine each pixel's color. The ambient and specular components are calculated the same way as in traditional ray tracing. The diffuse component, however, is calculated using the photon map. For each point in the scene, I look up the k nearest photons in an area around the point and sum the contributions of all the gathered photons to get the diffuse color. Each photon's contribution is the product of its color, its power, and the dot product of its incident direction with the surface normal. Finally, in my implementation I had to multiply the diffuse color by a flat scale factor to see a significant contribution to the scene color.
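The kd-tree build, k-nearest lookup, and diffuse sum described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the original code: the tree splits at the median on an axis that cycles through x, y, z (a common alternative is to split along the axis of largest extent), the hypothetical `max_dist` parameter stands in for the "area around the point", `boost` is the flat scale factor, and `incident` is assumed to store the photon's direction of travel. The usual photon-map density estimate also divides the sum by the gather area πr²; the sketch leaves that out to match the description.

```python
import heapq
import itertools
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]

@dataclass
class Photon:
    position: Vec
    incident: Vec  # direction of travel when the photon hit the surface
    color: Vec
    power: float

@dataclass
class KDNode:
    photon: Photon
    axis: int  # 0 = x, 1 = y, 2 = z
    left: Optional["KDNode"]
    right: Optional["KDNode"]

def build_balanced(photons: List[Photon], depth: int = 0) -> Optional[KDNode]:
    # Median split: the two halves differ in size by at most one photon,
    # so the tree stays balanced.
    if not photons:
        return None
    axis = depth % 3
    ordered = sorted(photons, key=lambda p: p.position[axis])
    mid = len(ordered) // 2
    return KDNode(ordered[mid], axis,
                  build_balanced(ordered[:mid], depth + 1),
                  build_balanced(ordered[mid + 1:], depth + 1))

def k_nearest(root: Optional[KDNode], point: Vec, k: int,
              max_dist: float) -> List[Photon]:
    if k <= 0:
        return []
    # Max-heap (via negated squared distance) holding the k closest so far.
    heap: List[Tuple[float, int, Photon]] = []
    tiebreak = itertools.count()
    max_d2 = max_dist * max_dist

    def visit(node: Optional[KDNode]) -> None:
        if node is None:
            return
        p = node.photon
        d2 = sum((a - b) ** 2 for a, b in zip(p.position, point))
        if d2 <= max_d2:
            if len(heap) < k:
                heapq.heappush(heap, (-d2, next(tiebreak), p))
            elif d2 < -heap[0][0]:
                heapq.heapreplace(heap, (-d2, next(tiebreak), p))
        diff = point[node.axis] - p.position[node.axis]
        near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
        visit(near)
        # Search the far side only if it could hold a closer photon than the
        # current k-th one (or one inside max_dist while the heap is not full).
        bound = -heap[0][0] if len(heap) == k else max_d2
        if diff * diff <= bound:
            visit(far)

    visit(root)
    return [p for _, _, p in heap]

def diffuse_color(root: Optional[KDNode], point: Vec, normal: Vec, k: int,
                  max_dist: float, boost: float) -> Vec:
    # Sum color * power * cos(theta) over the gathered photons, then apply the
    # flat boost. `incident` is a travel direction, so it is negated to get the
    # cosine with the normal; photons arriving from behind contribute nothing.
    total = [0.0, 0.0, 0.0]
    for p in k_nearest(root, point, k, max_dist):
        cos_t = max(0.0, -sum(a * b for a, b in zip(p.incident, normal)))
        for i in range(3):
            total[i] += p.color[i] * p.power * cos_t
    return (boost * total[0], boost * total[1], boost * total[2])
```

For example, a single photon with color `(1.0, 0.5, 0.0)` and power `2.0` arriving straight down onto an upward-facing surface, gathered with `boost=3.0`, yields a diffuse color of `(6.0, 3.0, 0.0)`.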

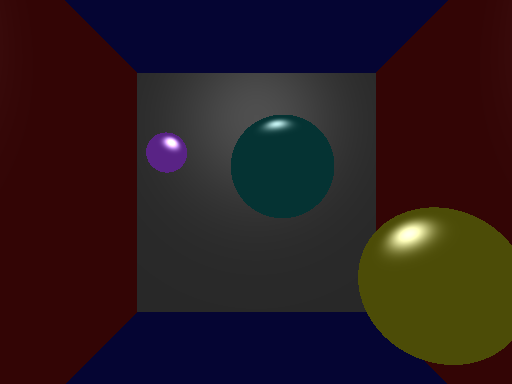

The two images above were rendered with the original ray tracer. The left image is fully ray traced, and the right image has the diffuse component removed.

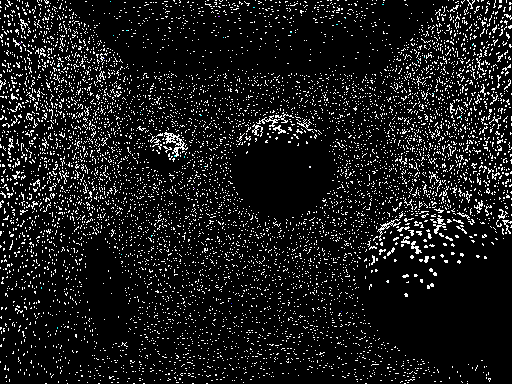

The image above is the photon map for the scene. It is created by gathering at most one photon per pixel and drawing that photon's color.

The two images above are examples of scenes rendered with photon mapping. For the left image, 500,000 photons were cast into the scene, resulting in approximately 2.2 million stored photons after reflections, and 150 photons were gathered at each pixel for their color contribution. The photon casting step took less than five seconds, but the rendering step took just under ten minutes.

For the right image, 10,000 photons were cast into the scene, resulting in approximately 33,000 stored photons after reflections, and 100 photons were gathered at each pixel for their color contribution. The photon casting step took about two seconds, and the rendering step took between three and five minutes.

The image above was rendered with 10,000 photons cast into the scene and 100 gathered at each pixel. The difference between this image and the one before it is the scale factor used to boost the diffuse contribution: this image uses four times the boost. The larger boost produces the distinct circles in the scene, each centered on a single photon, which makes the color bleeding from the reflected photons easier to see.

The main work still to be done on this project is reducing the run time. Even with a balanced kd-tree for photon lookup, the rendering step takes significantly longer than in other implementations. Color bleeding also remains an insignificant contribution to the scene and could benefit from further work. Lastly, many photon mapping implementations support reflective surfaces and caustics, both of which would be good additions to this implementation.

A big thanks to Drew and Cody for helping me work through some bugs the night before the project deadline.