Background | Previous work

Volume visualization has been an active area of research during the past 20 years. In order to visualize the data efficiently and precisely, volume visualization techniques must offer the following characteristics:

1. Understandable data representation

2. Quick data manipulation

3. Reasonable rendering times

There are four classes of classical volume rendering algorithms:

1. Ray Casting

A ray is cast through the volume for each image pixel, and the color and opacity values along the ray are integrated [2].
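The following is a minimal sketch of the per-pixel step. It assumes orthographic rays along the z axis, a volume stored as nested lists indexed `volume[z][y][x]`, scalar (grayscale) color, and a caller-supplied transfer function that maps a voxel value to (color, opacity). The names `composite_ray` and `render_orthographic` are hypothetical. The integral is approximated by front-to-back compositing of the discrete samples.

```python
def composite_ray(samples):
    """Front-to-back compositing of (color, alpha) samples along one ray.

    `samples` is ordered from the eye outward; color is a scalar intensity
    and alpha an opacity in [0, 1]. Returns the accumulated (color, alpha).
    """
    acc_color, acc_alpha = 0.0, 0.0
    for color, alpha in samples:
        # Each sample is attenuated by the transparency accumulated in front of it.
        weight = (1.0 - acc_alpha) * alpha
        acc_color += weight * color
        acc_alpha += weight
    return acc_color, acc_alpha


def render_orthographic(volume, transfer):
    """Cast one ray per pixel straight along +z through volume[z][y][x]."""
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    image = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Map each voxel value on the ray to (color, opacity), then integrate.
            samples = (transfer(volume[z][y][x]) for z in range(depth))
            image[y][x], _ = composite_ray(samples)
    return image


# Two stacked voxels of value 1.0, each mapped to opacity 0.5:
print(render_orthographic([[[1.0]], [[1.0]]], lambda v: (v, 0.5)))  # [[0.75]]
```

Front-to-back order is used here because it makes the accumulated opacity available at every step, which is what later optimizations such as early ray termination rely on.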

2. Splatting

The contribution of each voxel to the image is computed by distributing the voxel's value over a neighborhood of pixels [2].
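The sketch below illustrates the distribution step. It assumes a Gaussian footprint, an orthographic projection along z so that voxel (x, y, z) lands on pixel (x, y), and simple additive accumulation rather than opacity compositing. The function names and the default `radius`/`sigma` values are illustrative choices, not parameters from the source.

```python
import math


def gaussian_footprint(radius, sigma):
    """Normalized 2D Gaussian kernel used as each voxel's splat footprint."""
    weights = {
        (dx, dy): math.exp(-(dx * dx + dy * dy) / (2.0 * sigma * sigma))
        for dy in range(-radius, radius + 1)
        for dx in range(-radius, radius + 1)
    }
    total = sum(weights.values())
    return {offset: w / total for offset, w in weights.items()}


def splat_volume(volume, width, height, radius=1, sigma=0.8):
    """Project volume[z][y][x] orthographically along z by splatting each voxel.

    Contributions are simply summed (an X-ray style image); a full splatter
    would instead composite depth-sorted splats using their opacities.
    """
    kernel = gaussian_footprint(radius, sigma)
    image = [[0.0] * width for _ in range(height)]
    for plane in volume:
        for y, row in enumerate(plane):
            for x, value in enumerate(row):
                if value == 0.0:
                    continue  # empty voxels contribute nothing
                # Distribute the voxel's value over the pixels around (x, y).
                for (dx, dy), w in kernel.items():
                    px, py = x + dx, y + dy
                    if 0 <= px < width and 0 <= py < height:
                        image[py][px] += w * value
    return image
```

Because the kernel is normalized, a voxel whose footprint lies fully inside the image deposits exactly its own value, spread over the neighboring pixels.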

3. Cell Projection

The volume is decomposed into polyhedra whose vertices are the sample points. The polyhedra are then sorted in depth order, and their polygonal faces are scan-converted into image space. The color and opacity at each pixel are computed by integrating between the front and back faces of each polyhedron [2].
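The sketch below walks through the same three steps: depth sort, scan conversion, and per-pixel opacity from the front-to-back face distance. To keep scan conversion trivial, it substitutes axis-aligned boxes for general polyhedra. It assumes an orthographic view along +z, a constant color and extinction coefficient per cell, and the exponential absorption model `a = 1 - exp(-extinction * thickness)`. `Cell` and `project_cells` are hypothetical names. For non-intersecting boxes, sorting by center depth gives a correct visibility order; general polyhedral meshes need a proper visibility sort.

```python
import math
from dataclasses import dataclass


@dataclass
class Cell:
    """Axis-aligned box in view space; the eye looks along +z from z = 0."""
    x0: float
    x1: float
    y0: float
    y1: float
    z0: float          # front face depth
    z1: float          # back face depth
    color: float       # constant emitted intensity inside the cell
    extinction: float  # constant opacity per unit length


def project_cells(cells, width, height):
    color = [[0.0] * width for _ in range(height)]
    alpha = [[0.0] * width for _ in range(height)]
    # Depth sort: farthest cell first, so cells can be composited back to front.
    for cell in sorted(cells, key=lambda c: (c.z0 + c.z1) / 2, reverse=True):
        # Opacity from the ray segment between the front and back faces.
        thickness = cell.z1 - cell.z0
        a = 1.0 - math.exp(-cell.extinction * thickness)
        # Scan-convert the footprint: pixels whose centers lie inside the box.
        for py in range(height):
            if not cell.y0 <= py + 0.5 < cell.y1:
                continue
            for px in range(width):
                if cell.x0 <= px + 0.5 < cell.x1:
                    # Back-to-front "over" operator.
                    color[py][px] = a * cell.color + (1.0 - a) * color[py][px]
                    alpha[py][px] = a + (1.0 - a) * alpha[py][px]
    return color, alpha
```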

4. Multi-pass resampling

The entire volume is resampled to the image coordinate system so that the resampled voxels line up behind each other on the viewing axis in image space. Then the voxels are composited together along the viewing axis as in a ray caster [2].
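The two steps can be sketched on a single slice of the volume: a 2D grid indexed `grid[z][x]`, with the viewing axis along z after resampling. Here the resampling is done as three separable 1D passes using Paeth's three-shear rotation with linear interpolation. This particular decomposition, the transfer function, and all function names are assumptions for illustration rather than details taken from [2].

```python
import math


def shift_1d(line, offset):
    """Resample a 1D sequence shifted by a fractional offset (linear interpolation)."""
    n = len(line)
    out = [0.0] * n
    for i in range(n):
        src = i - offset          # source position that lands on output sample i
        j = math.floor(src)
        f = src - j
        if 0 <= j < n:
            out[i] += (1.0 - f) * line[j]
        if 0 <= j + 1 < n:
            out[i] += f * line[j + 1]
    return out


def shear_x(grid, k):
    """One pass: shift each row grid[z] along x by k * (z - center)."""
    cz = (len(grid) - 1) / 2
    return [shift_1d(row, k * (z - cz)) for z, row in enumerate(grid)]


def shear_z(grid, k):
    """One pass: shift each column along z by k * (x - center)."""
    depth, width = len(grid), len(grid[0])
    cx = (width - 1) / 2
    cols = [shift_1d([grid[z][x] for z in range(depth)], k * (x - cx))
            for x in range(width)]
    return [[cols[x][z] for x in range(width)] for z in range(depth)]


def rotate_slice(grid, theta):
    """Rotate a grid[z][x] slice about its center with three 1D shear passes.

    Paeth's decomposition; intended for |theta| <= pi/2.
    """
    a, b = -math.tan(theta / 2), math.sin(theta)
    return shear_x(shear_z(shear_x(grid, a), b), a)


def composite_along_z(grid, opacity=0.5):
    """After resampling, voxels line up along z: composite each column front to back."""
    image = []
    for x in range(len(grid[0])):
        color, acc = 0.0, 0.0
        for z in range(len(grid)):
            v = grid[z][x]
            a = min(1.0, opacity * v)   # hypothetical transfer function
            color += (1.0 - acc) * a * v
            acc += (1.0 - acc) * a
        image.append(color)
    return image
```

Each shear touches memory along a single axis, which is the appeal of the multi-pass formulation: every pass is a simple, cache-friendly 1D resampling.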

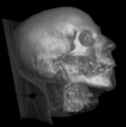

This family of algorithms is robust and represents the data in an understandable manner. However, these algorithms must sample large 3D arrays of data and are therefore computationally expensive. As shown in figure 1, a dataset of a human head can contain 15 million samples. Iterating through every sample of a three-dimensional dataset is costly: for a grid with n samples along each axis, the work is typically O(n^3).
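The cubic growth becomes concrete with a little arithmetic. The example assumes a cubic grid of n samples per axis; the value 256 below is an illustrative resolution, not the dimensions of the dataset in figure 1:

```latex
N = n_x \, n_y \, n_z = n^3, \qquad
256^3 = 16\,777\,216 \approx 1.7 \times 10^{7}, \qquad
\frac{(2n)^3}{n^3} = 8
```

Doubling the resolution along every axis therefore multiplies the number of samples, and the per-frame work of a brute-force traversal, by eight.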

Given enough rendering time, processing power, and memory bandwidth, these datasets can be rendered without difficulty. However, such brute-force rendering fails two of the characteristics, quick data manipulation and reasonable rendering times, and therefore does not support interactive applications. To meet these two characteristics, algorithmic optimizations that exploit coherence in the data can be implemented, achieving high rendering rates and allowing interactive applications without the need for special hardware.
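Two common optimizations of this kind are empty-space skipping and early ray termination. Empty-space skipping exploits the spatial coherence of transparent regions. Early ray termination stops a ray once its accumulated opacity is nearly 1. The sketch below applies both to a single ray. It assumes the run lengths of transparent samples are precomputed once (for example, whenever the transfer function changes) and reused across frames. These are generic examples, not necessarily the optimizations used in this project; the function names and the 0.95 cutoff are hypothetical.

```python
def transparent_runs(values, is_transparent):
    """runs[i] = number of consecutive transparent samples starting at index i."""
    runs = [0] * len(values)
    run = 0
    for i in range(len(values) - 1, -1, -1):  # scan backwards to build run lengths
        run = run + 1 if is_transparent(values[i]) else 0
        runs[i] = run
    return runs


def cast_ray_accelerated(values, runs, transfer, stop_alpha=0.95):
    """Front-to-back compositing with empty-space skipping and early termination.

    Returns (color, alpha, samples_shaded).
    """
    color, acc, shaded = 0.0, 0.0, 0
    i = 0
    while i < len(values):
        if runs[i] > 0:
            i += runs[i]          # jump over the whole transparent run at once
            continue
        c, a = transfer(values[i])
        shaded += 1
        w = (1.0 - acc) * a
        color += w * c
        acc += w
        if acc >= stop_alpha:     # samples behind this point are almost fully hidden
            break
        i += 1
    return color, acc, shaded


def demo_transfer(v):
    """Hypothetical transfer function: nonzero values are white and 80% opaque."""
    return (1.0, 0.8) if v else (0.0, 0.0)


values = [0, 0, 0, 0, 1, 1, 1, 1, 1, 1]
runs = transparent_runs(values, lambda v: v == 0)
print(runs)  # [4, 3, 2, 1, 0, 0, 0, 0, 0, 0]
print(cast_ray_accelerated(values, runs, demo_transfer)[2])  # 2
```

In this example only 2 of the 10 samples are shaded. The error introduced by stopping early is bounded by the remaining transparency, here at most 0.05.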

This project has been designed and implemented to model the "Bubble model" algorithm, which provides understandable data representation, quick data manipulation, and reasonable rendering times. Understandable data representation is achieved by implementing the "Bubble model" algorithm and combining it with ray casting for surface shading. A graphical user interface lets the user change parameters such as opacity, threshold values, and the rendering technique.
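This section does not describe the Bubble model itself, so the sketch below does not attempt to reproduce it. It shows only the generic half of the combination: ray casting to a threshold surface, followed by surface shading. It assumes orthographic rays along +z, a volume indexed `vol[z][y][x]`, a central-difference gradient as the surface normal, and a single Lambertian light. The function names and the `threshold`/`to_light` parameters are hypothetical stand-ins for user-adjustable settings.

```python
import math


def voxel(vol, x, y, z):
    """Clamp-to-edge lookup into vol[z][y][x]."""
    z = min(max(z, 0), len(vol) - 1)
    y = min(max(y, 0), len(vol[0]) - 1)
    x = min(max(x, 0), len(vol[0][0]) - 1)
    return vol[z][y][x]


def gradient(vol, x, y, z):
    """Central-difference gradient of the scalar field."""
    return (
        (voxel(vol, x + 1, y, z) - voxel(vol, x - 1, y, z)) / 2.0,
        (voxel(vol, x, y + 1, z) - voxel(vol, x, y - 1, z)) / 2.0,
        (voxel(vol, x, y, z + 1) - voxel(vol, x, y, z - 1)) / 2.0,
    )


def shade_threshold_surface(vol, threshold, to_light=(0.0, 0.0, -1.0)):
    """First-hit ray casting along +z with Lambertian shading of the hit voxel.

    `to_light` is a unit vector pointing from the surface toward the light;
    the default places the light at the viewer.
    """
    depth, height, width = len(vol), len(vol[0]), len(vol[0][0])
    image = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            for z in range(depth):
                if vol[z][y][x] < threshold:
                    continue
                gx, gy, gz = gradient(vol, x, y, z)
                norm = math.sqrt(gx * gx + gy * gy + gz * gz)
                if norm > 0.0:
                    # The outward normal points toward lower values: -gradient.
                    n = (-gx / norm, -gy / norm, -gz / norm)
                    lambert = sum(ni * li for ni, li in zip(n, to_light))
                    image[y][x] = max(0.0, lambert)
                break  # stop at the first sample at or above the threshold
    return image
```

Changing `threshold` moves the extracted surface. This is the kind of parameter a user interface would expose.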